Cross-modal search with ImageBind and DocArray

Combine ImageBind with DocArray to implement a cross-modal search system

Background

Meta recently released yet another open-source model, after a series of cool models like LLaMA and SAM.

This time they embrace further multimodal AI with a focus on embeddings with the new ImageBind Model.

We gave it a try and in this blog post, we will show how you can use this cool model along with DocArray to implement a cross-modal search system!

ImageBind

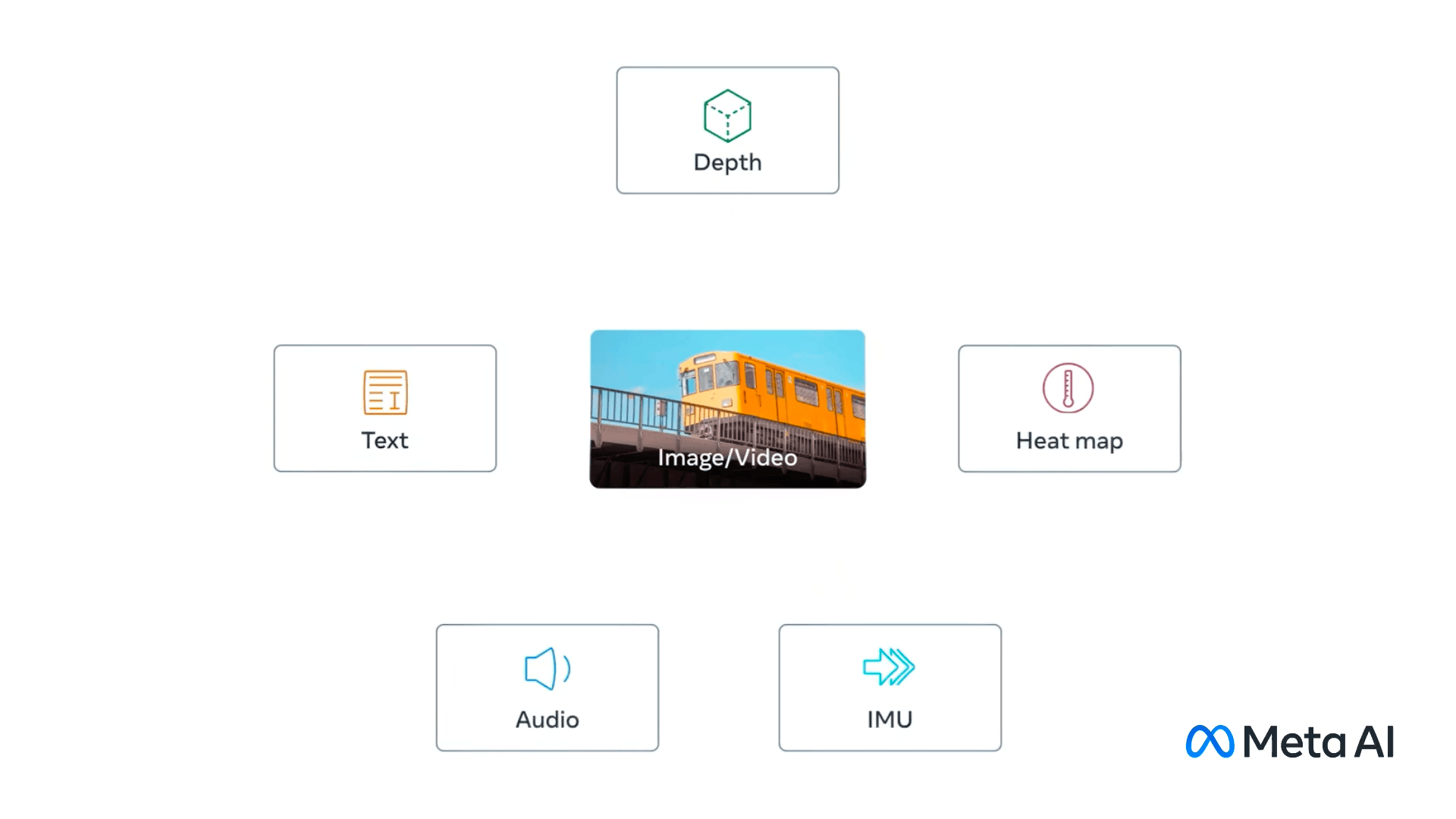

ImageBind is a new embedding model from Meta that is capable of learning a joint embedding across six different modalities - images, text, audio, depth, thermal, and IMU data (inertial measurement units, which track motion and position).

The true power of ImageBind lies in its ability to surpass specialist models that are trained for one specific modality, demonstrating its capability to analyze a multitude of data forms in unison. This holistic approach enables ImageBind to relate objects in an image to their corresponding sounds, 3D shapes, temperatures, and motion patterns, mirroring our human ability to process a complex blend of sensory information.

Moreover, ImageBind demonstrates that it's feasible to create a shared embedding space across multiple modalities without the need for training on data encompassing every possible combination of these modalities. This is a crucial development given the impracticality of creating datasets that include every potential combination of modalities.

Representing different modalities in the same embedding space allows for several use cases, like cross-modal generation, embedding-space generation, or cross-modal retrieval. In this blog, we’re interested in the latter use case and we’ll implement it using DocArray.

DocArray

DocArray is a library for representing, sending, and storing multi-modal data, perfect for Machine Learning applications.

With DocArray you can:

DocArray is also optimized for neural search and supports searching by vector either in-memory or using a variety of vector databases, notably Weaviate, Qdrant, and ElasticSearch.

As we observed more multimodal AI models, we reshaped DocArray to become more suitable for multimodal AI use cases: multimodal AI search and generation.

Since the new release (v0.30.0), you can enjoy flexible schemas, more multimodal datatypes, and native support for multimodal neural search.

Let’s see it in action with ImageBind!

Cross-modal search with DocArray

First, we need to install DocArray:

pip install docarray

Next, we need to install ImageBind. We can do so by cloning the repository:

git clone https://github.com/facebookresearch/ImageBind

pip install -r ImageBind/requirements.txt

Once we install the dependencies, we can load the ImageBind model:

import os

os.chdir('ImageBind')

import data

import torch

from models import imagebind_model

from models.imagebind_model import ModalityType

device = "cuda:0" if torch.cuda.is_available() else "cpu"

# Instantiate model

model = imagebind_model.imagebind_huge(pretrained=True)

model.eval()

model.to(device)

We will use pre-defined documents from DocArray for 3 data types:

The predefined documents offer several utilities like data loading, visualization, and built-in embedding.

Let’s first use these documents to implement an embedding function that uses the loaded ImageBind model:

from typing import Union

from docarray.documents import TextDoc, ImageDoc, AudioDoc

def embed(doc: Union[TextDoc, ImageDoc, AudioDoc]):

"""inplace embedding of document"""

with torch.no_grad():

if isinstance(doc, TextDoc):

embedding = model({ModalityType.TEXT: data.load_and_transform_text([doc.text], device)})[ModalityType.TEXT]

elif isinstance(doc, ImageDoc):

embedding = model({ModalityType.VISION: data.load_and_transform_vision_data([doc.url], device)})[ModalityType.VISION]

elif isinstance(doc, AudioDoc):

embedding = model({ModalityType.AUDIO: data.load_and_transform_audio_data([doc.url], device)})[ModalityType.AUDIO]

else:

raise ValueError('one of the modality fields need to be set')

doc.embedding = embedding.detach().cpu().numpy()[0]

return docLet’s quickly try out the in-place embedding function:

doc = ImageDoc(url='.assets/car_image.jpg')

doc = embed(doc)

doc

📄ImageDoc: e679676 ...

╭─────────────────────────────┬────────────────────────────────────────╮

│ Attribute │ Value │

├─────────────────────────────┼────────────────────────────────────────┤

│ url: ImageUrl │ .assets/car_image.jpg │

│ embedding: NdArrayEmbedding │ NdArrayEmbedding of shape (1024,) │

╰─────────────────────────────┴────────────────────────────────────────╯

As we can see, the document has an embedding field generated with shape of (1024,). Now let’s dive into search.

Cross-modal search

Text-to-image

In this blog, we will use the in-memory vector index to perform neural search using the embeddings. However, feel free to check our documentation to find out about vector database integrations.

Let’s create an index for image documents and index some documents:

from docarray.index.backends.in_memory import InMemoryExactNNIndex

image_index = InMemoryExactNNIndex[ImageDoc]()

image_index.index([

embed(doc) for doc in

[ImageDoc(url=img_path) for img_path in [".assets/dog_image.jpg", ".assets/car_image.jpg", ".assets/bird_image.jpg"]]

])

Now, let's search for bird images using text:

match = image_index.find(embed(TextDoc(text='bird')).embedding, search_field='embedding', limit=1) \

.documents[0]

match.url.display()

Text-to-audio

Now let's try a different modality: audio. The process is pretty much the same. First, create an index for audio documents:

from docarray.index.backends.in_memory import InMemoryExactNNIndex

audio_index = InMemoryExactNNIndex[AudioDoc]()

audio_index.index([

embed(doc) for doc in

[AudioDoc(url=audio_path) for audio_path in [".assets/dog_audio.wav", ".assets/car_audio.wav", ".assets/bird_audio.wav"]]

])

Now search for bird sounds by using text:

match = audio_index.find(embed(TextDoc(text='bird')).embedding, search_field='embedding', limit=1) \

.documents[0]

match.url.display()

# Displays the bird sound

Image-to-audio

What’s cool with ImageBind is that you can do image-to-audio search as well. Let’s use the same index and perform one more search query, with an image this time!

# using the same audio_index, find by image:

match = audio_index.find(embed(ImageDoc(url='.assets/car_image.jpg')).embedding, search_field='embedding', limit=1) \

.documents[0]

match.url.display()

# Displays the card soundConclusion

Cross-modal search, made possible with ImageBind and DocArray, is transforming the way we handle and interpret multimodal data. With ImageBind's ability to generate shared embedding spaces across multiple modalities and DocArray's clear and easy API to represent, send, and store this multimodal data, we have demonstrated how to implement a cross-modal search system.

These tools enable you to explore a wide range of use cases and applications. The cross-modal search capabilities we’ve showcased here are just the beginning, and we’re excited to see what you can build and the exciting applications around it.

Check out DocArray’s documentation, GitHub repo, and Discord to find out how to implement multi-modal search systems.

Furthermore, you can check the ImageBind paper and GitHub repo for more details about the model.

Happy searching!