Dify.AI integrates Jina Embeddings for RAG

Dify.AI, a leading open-source platform for building generative AI applications, is now leveraging Jina Embeddings v2!

Dify.AI, an online platform for developing LLM applications, has integrated the Jina Embeddings v2 API into its AI toolkit, making it instantly available when you build and host LLM applications. All you need to do is add your Jina Embeddings API key in Dify.AI’s web interface to get the full power of Jina AI’s embedding models in your RAG (retrieval-augmented generation) applications.

Integrating Embeddings in RAG

Today’s AI architectures, including large language models (LLMs), have no direct way to integrate outside information sources. A model encodes information from its training data with varying levels of accuracy, and it is impractical to retrain it every time new, potentially useful data becomes available.

For example, I asked JinaChat a question about current events:

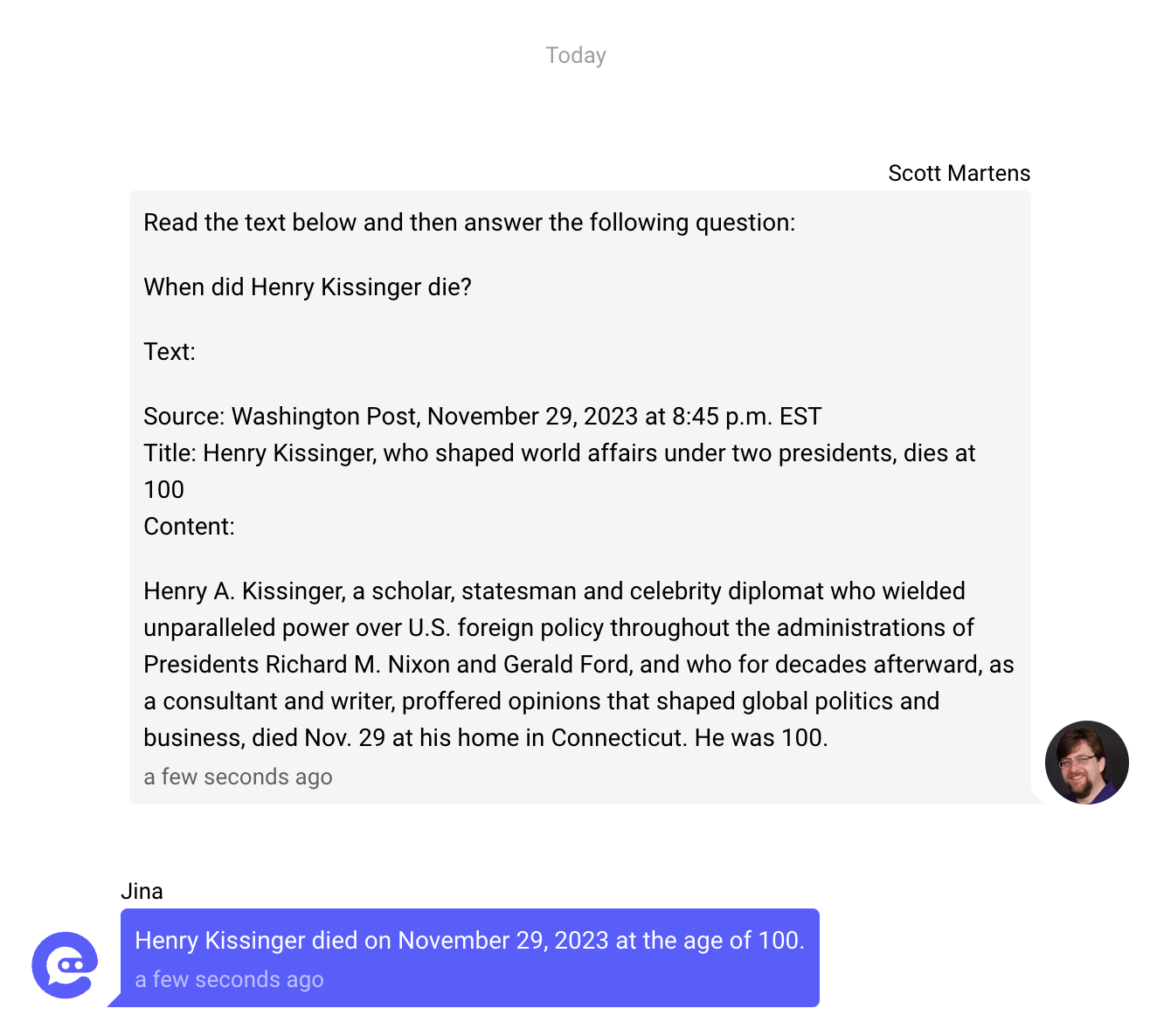

The only way to ensure that an LLM has the information needed to answer a factual question is to provide it in the prompt:

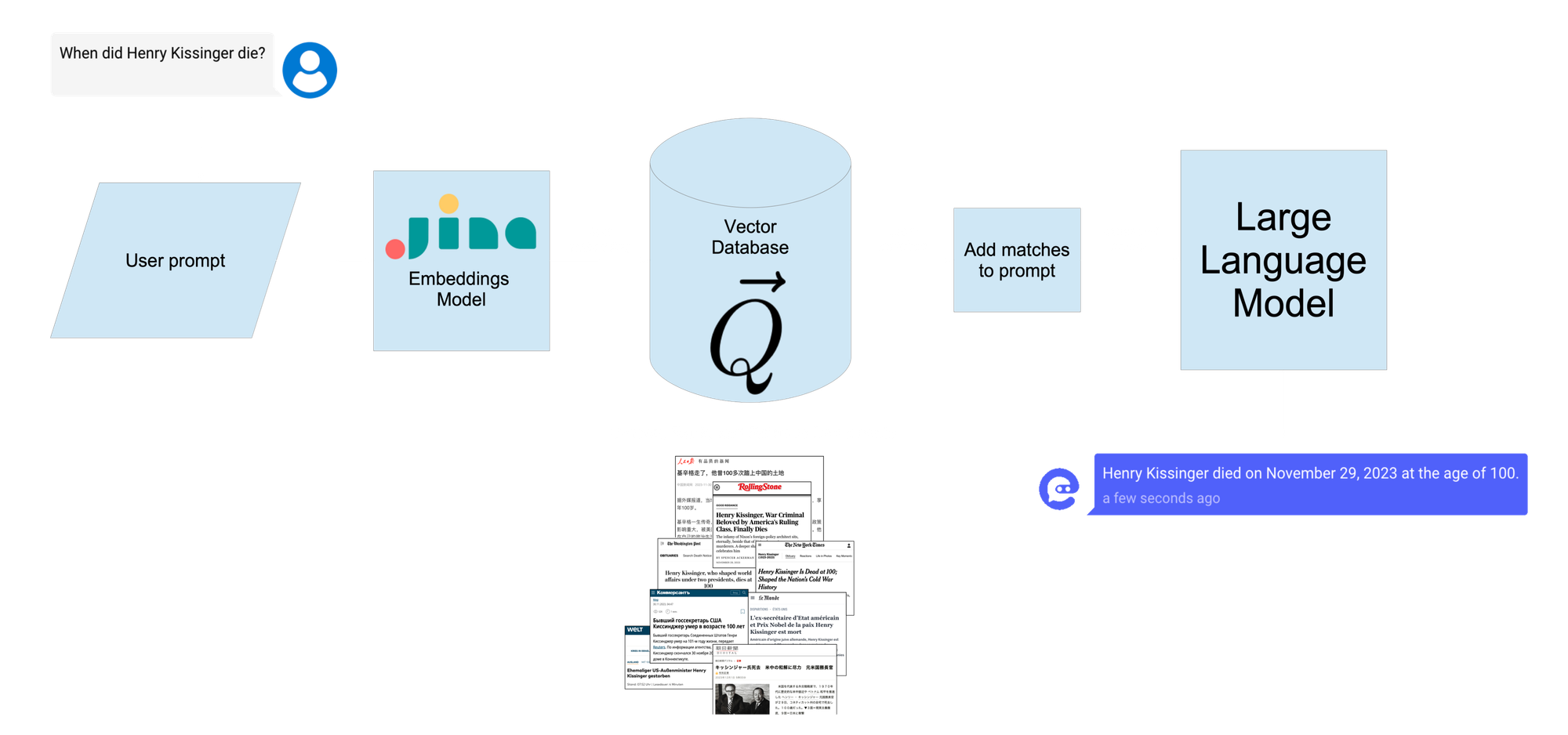

Naturally, an LLM that only answers questions correctly if you include the answer in your question isn’t very useful. This has led to a body of techniques known as Retrieval-Augmented Generation (RAG). RAG is a framework built around an LLM: it searches external information sources for material that might contain the information needed to answer a user’s request, and then presents that material to the LLM together with the user’s prompt.

This strategy has the added benefit that LLMs tend to hallucinate much less when they work from a given text than when they must recall things they only partially remember from training.
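To make the retrieve-then-prompt loop concrete, here is a minimal Python sketch. It is not how Dify.AI implements RAG. It assumes a hypothetical toy `embed` function (a bag-of-words term-frequency vector) as a stand-in for a real embedding model such as Jina Embeddings v2, and the names `embed`, `cosine`, `retrieve`, and `build_prompt`, as well as the sample documents, are illustrative only.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical toy stand-in for an embedding model: a sparse
    term-frequency vector. A real RAG pipeline would call a dense
    embedding model (e.g. Jina Embeddings v2) here instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents whose vectors are most similar to the query's."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved passages in front of the user's question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Usage with made-up sample documents (illustrative only).
docs = [
    "Dify.AI integrated the Jina Embeddings v2 API for RAG applications.",
    "Jina Embeddings v2 models accept inputs of up to 8,192 tokens.",
    "The weather in Berlin was rainy last week.",
]
question = "How many tokens can Jina Embeddings v2 models accept?"
print(build_prompt(question, docs, k=1))
```

A production setup would replace the toy `embed` with calls to a dense embedding model and store the document vectors in an index, rather than re-embedding every document for each query.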

Leveraging Jina Embeddings’ Superior Performance

Dify.AI has integrated Jina Embeddings v2 to improve retrieval quality for RAG prompting. Jina AI’s models provide state-of-the-art accuracy in RAG applications, and with an input window of 8,192 tokens, they can embed much longer and more complex inputs than most competing models, at a much lower price.

You can now use Jina Embeddings in your LLM projects through Dify.AI’s application builder, as demonstrated in Dify.AI’s video and in the post on Dify.AI’s blog.

Get Involved

Check out Dify.AI’s LLM application builder and hosting service for yourself. To try it out with Jina Embeddings, get a free trial token from the Jina AI website.

For more information about Jina AI’s offerings, check out the Jina AI website or join our community on Discord.