Do You Truly Need a Dedicated Vector Store?

Unraveling vector search spaghetti: Lucene's charm vs. shiny vector stores. Navigating enterprise mazes & startup vibes. Where's search headed next?

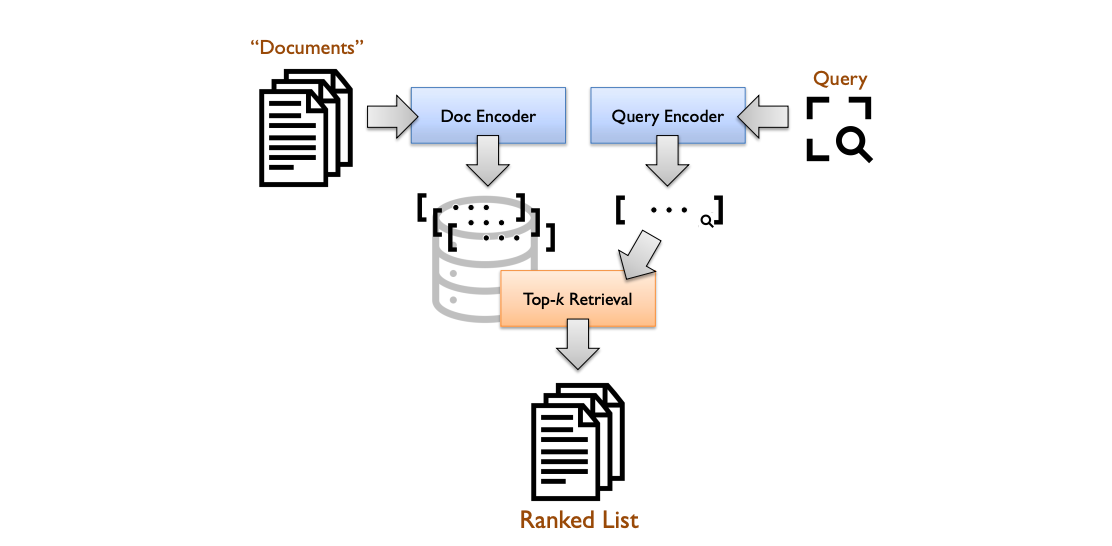

In the rapidly shifting landscape of technology, it's all too easy to be ensnared by the latest buzzwords and narratives. One such prevailing discourse is the perceived necessity for a dedicated "vector store" or "vector database" in the modern AI stack. This notion is propelled by the increasing application of deep neural networks to search, leading many to believe that managing a vast number of dense vectors demands a specialized store. But is this narrative grounded in reality?

Drawing on the insights of the paper "Vector Search with OpenAI Embeddings: Lucene Is All You Need" by Jimmy Lin et al., and enriched by the collective wisdom of the tech community, let's dissect this debate.

Lucene's Role in Modern Vector Search

Lucene, renowned for its inverted-index-based search, has long been a cornerstone of the search world. Critics point to its limitations for contextual (semantic) search: lexical matching, even with fuzzy matching based on minimum edit distance, cannot recognize that two differently worded passages mean the same thing. Yet Lucene is far from stagnant. As the paper describes, Lucene now supports hierarchical navigable small world (HNSW) indexes for approximate nearest-neighbor search over dense vectors. This showcases its adaptability and shows that it can deliver effective vector search without a dedicated vector store.
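To make the HNSW idea a little more concrete, here is a minimal sketch of the search step at its heart: a best-first (beam) search over a proximity graph. Each node links to its nearest neighbours, and a query walks greedily toward closer nodes while keeping the `ef` best candidates seen so far. This is a deliberately simplified illustration, not Lucene's implementation. It uses a single layer (real HNSW stacks several sparser layers to find a good entry point), builds the graph by brute force, and the names `build_knn_graph`, `graph_search`, `exact_search`, `m` and `ef` are hypothetical.

```python
import heapq
import math
from typing import Dict, List, Sequence, Set

Vector = Sequence[float]


def build_knn_graph(vectors: List[Vector], m: int) -> Dict[int, Set[int]]:
    """Link each vector to its m nearest neighbours (brute force, O(n^2)).

    Edges are stored in both directions so the graph stays navigable.
    Real HNSW builds its layered graph incrementally; this is a simplification.
    """
    graph: Dict[int, Set[int]] = {i: set() for i in range(len(vectors))}
    for i, v in enumerate(vectors):
        others = sorted(
            (j for j in range(len(vectors)) if j != i),
            key=lambda j: math.dist(v, vectors[j]),
        )
        for j in others[:m]:
            graph[i].add(j)
            graph[j].add(i)
    return graph


def graph_search(
    vectors: List[Vector],
    graph: Dict[int, Set[int]],
    query: Vector,
    k: int,
    ef: int,
    entry: int = 0,
) -> List[int]:
    """Single-layer best-first search that keeps the ef closest nodes found."""
    d0 = math.dist(query, vectors[entry])
    visited = {entry}
    candidates = [(d0, entry)]  # min-heap: closest unexpanded node first
    best = [(-d0, entry)]  # max-heap via negation: worst kept node on top
    while candidates:
        dist, node = heapq.heappop(candidates)
        if dist > -best[0][0]:
            break  # closest unexpanded node is worse than everything we keep
        for nb in graph[node]:
            if nb in visited:
                continue
            visited.add(nb)
            d = math.dist(query, vectors[nb])
            if len(best) < ef or d < -best[0][0]:
                heapq.heappush(candidates, (d, nb))
                heapq.heappush(best, (-d, nb))
                if len(best) > ef:
                    heapq.heappop(best)  # drop the current worst
    # Sort kept nodes by distance (ascending) and return the top k ids.
    return [n for _, n in sorted((-neg_d, n) for neg_d, n in best)][:k]


def exact_search(vectors: List[Vector], query: Vector, k: int) -> List[int]:
    """Brute-force k-nearest neighbours: the baseline the graph search approximates."""
    return sorted(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))[:k]


if __name__ == "__main__":
    points = [(float(i),) for i in range(20)]  # toy 1-D "embeddings"
    g = build_knn_graph(points, m=2)
    print(graph_search(points, g, (7.2,), k=3, ef=3))  # [7, 8, 6]
    print(exact_search(points, (7.2,), k=3))  # [7, 8, 6]
```

On this toy line of points the graph search finds the same neighbours as exact search. On real high-dimensional embeddings the result is approximate, and raising `ef` trades speed for recall.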

It's not just Lucene making waves. Vector indexes are now industry staples: Elasticsearch, PostgreSQL (through the pgvector extension), and Redis all support vector search. Google's recent endorsement of pgvector for its AlloyDB offering underscores the mainstream shift toward vector search.

Enterprise Search Challenges: Navigating the Vector Store Maze

Modern enterprise architectures are intricate tapestries of legacy systems, cutting-edge solutions, and a myriad of tools aimed at solving specific problems. Introducing a dedicated vector store would not be a simple addition; it would be a paradigm shift. Historically, enterprises have leaned on tried-and-tested solutions, especially where they have already invested heavily. Lucene-based platforms, with their proven track record, offer a sense of reliability. The challenge for enterprises is not only technological; it also involves change management, training, and integration. The real question is whether the potential performance gain is worth the upheaval.

Startup Innovations: Beyond the Vector Store Hype

The startup ecosystem thrives on disruption and innovation. The vision of a dedicated vector store is alluring, promising unparalleled performance and capabilities. But in the world of startups, vision needs to be backed by tangible benefits. The reality is that while vector stores offer a new approach, tools like Lucene have been refining their capabilities for years. For startups, the challenge is to prove that their solutions offer not just incremental improvements but transformative changes. In a data-driven world, the onus is on these startups to showcase empirical evidence of their superiority, not just in lab conditions but in real-world scenarios.
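One concrete form such evidence usually takes is recall@k: the fraction of the true k nearest neighbours (from exact, brute-force search) that an approximate index actually returns, ideally reported alongside latency or throughput. The sketch below computes it. The function names and the example result lists are hypothetical, and a real benchmark would run many queries over a representative corpus.

```python
from statistics import mean
from typing import List, Sequence


def recall_at_k(approx: Sequence[int], exact: Sequence[int], k: int) -> float:
    """Fraction of the exact top-k ids that also appear in the approximate top-k."""
    truth = set(exact[:k])
    if not truth:
        raise ValueError("exact results must contain at least one id")
    return len(truth & set(approx[:k])) / len(truth)


def mean_recall_at_k(
    approx_runs: List[Sequence[int]], exact_runs: List[Sequence[int]], k: int
) -> float:
    """Average recall@k over a batch of queries (one result list per query)."""
    if len(approx_runs) != len(exact_runs):
        raise ValueError("need one exact result list per approximate result list")
    return mean(recall_at_k(a, e, k) for a, e in zip(approx_runs, exact_runs))


if __name__ == "__main__":
    # Hypothetical top-3 results for two queries: approximate index vs. exact search.
    approx_runs = [[7, 8, 5], [1, 2, 3]]
    exact_runs = [[7, 8, 6], [3, 2, 1]]
    print(mean_recall_at_k(approx_runs, exact_runs, k=3))
```

An index that always matches exact search scores 1.0. Reporting recall at a stated latency is what makes a comparison between a Lucene-based setup and a dedicated store meaningful.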

Achieving the Ideal: Performance Meets Practicality in Search Solutions

Performance is the holy grail in the world of search. But it's essential to understand that performance doesn't exist in a vacuum. It's intertwined with factors like cost, scalability, and ease of integration. While specialized vector databases might offer a performance edge in specific scenarios, solutions like Lucene bring a balance of performance, reliability, and familiarity. The challenge is to discern when to chase the bleeding edge of performance and when to opt for a more pragmatic, holistic solution. In a world inundated with options, the true art lies in making choices that deliver consistent value over time.
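A quick back-of-the-envelope estimate shows why cost and scalability weigh as heavily as raw speed. The sketch below estimates the memory needed to hold raw embeddings plus graph links. The corpus size of 10 million vectors is hypothetical. The 1,536 dimensions match the output size of OpenAI's text-embedding-ada-002 model. The figure of 32 neighbour ids of 4 bytes each per vector is an assumption; real systems add their own overheads.

```python
def index_memory_bytes(
    num_vectors: int,
    dims: int,
    bytes_per_dim: int = 4,  # float32 components by default
    links_per_vector: int = 32,  # assumed flat count of graph neighbour ids
    bytes_per_link: int = 4,  # assumed 32-bit node ids
) -> int:
    """Rough memory estimate: raw vectors plus graph neighbour ids.

    Ignores metadata, layer structure, padding, and any on-disk overhead.
    """
    vectors = num_vectors * dims * bytes_per_dim
    graph = num_vectors * links_per_vector * bytes_per_link
    return vectors + graph


if __name__ == "__main__":
    n = 10_000_000  # hypothetical corpus size
    total = index_memory_bytes(n, dims=1536)
    print(f"{total / 1e9:.2f} GB")  # 62.72 GB
```

Under these assumptions the raw vectors (about 61.4 GB), not the graph links (about 1.3 GB), dominate the footprint. Any system that keeps full-precision vectors in memory pays roughly that cost, and the `bytes_per_dim` parameter shows how much compression could change the picture.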

The Future of Vector Search: Lucene vs. Dedicated Stores

The debate over dedicated vector stores versus hybrid Lucene-based solutions, which combine keyword and vector search in one engine, is alive and well. However, a clear sentiment is emerging from the tech community: a preference for optimizing what we already have rather than chasing the next shiny object. As we push the envelope in search capabilities, it's worth remembering that the optimal solution is often already within our grasp.