How to Build Article Recommendations with Jina Reranker API Only

You can build an article recommendation system with just the Jina Reranker API—no pipeline, no embeddings, no vector search, only reranking. Find out how in 20 lines of code.

We introduced the Reranker API two weeks ago, establishing it as a leading reranking solution in the market. Jina Reranker outperforms popular baselines in various benchmarks, with an increase of up to 33% in hit rate over BM25 results. While the performance is impressive, what really excites me is the potential of the Reranker API's interface. It is straightforward: you send a query and a list of documents, and it directly returns the top-k documents, reranked by relevance. This means that, in theory, one could build a search or recommendation system using only the Reranker, with no need for BM25, embeddings, vector databases, or any pipeline, and still get end-to-end functionality.
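To make that input/output contract concrete, here is a minimal local stand-in for a rerank call. It assumes a response shaped like `{ results: [{ index, relevance_score }] }`: the `index` field is what the implementation later in this post relies on, while the `relevance_score` name is an assumption here. The scoring is a crude word-overlap count chosen purely for illustration; the real service scores each query-document pair with a neural model, not with word overlap, and `mockRerank` is a hypothetical name.

```javascript
// Toy stand-in for a rerank endpoint: same input/output shape, fake scoring.
// Word overlap is used purely for illustration; it is NOT how Jina Reranker scores.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) || [];
}

function overlapScore(query, doc) {
  const queryTerms = new Set(tokenize(query));
  const docTerms = new Set(tokenize(doc));
  let shared = 0;
  for (const term of queryTerms) {
    if (docTerms.has(term)) shared += 1;
  }
  // Normalize by the number of distinct query terms so scores fall in [0, 1].
  return queryTerms.size === 0 ? 0 : shared / queryTerms.size;
}

// Takes a query and a list of document strings and returns the top_n results,
// sorted by descending score (ties broken by input order), each pointing back
// to its position in `documents`.
function mockRerank({ query, documents, top_n }) {
  const results = documents
    .map((doc, index) => ({ index, relevance_score: overlapScore(query, doc) }))
    .sort((a, b) => b.relevance_score - a.relevance_score || a.index - b.index)
    .slice(0, top_n);
  return { results };
}

const response = mockRerank({
  query: "reranker api for search",
  documents: [
    "A post about embeddings",
    "Reranker API launch: better search",
    "Company news",
  ],
  top_n: 2,
});
console.log(response.results);
```

The point is the shape, not the scoring: no index, embedding, or vector store is involved, only a query, a list of documents, and a sorted list of indices coming back.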

This concept intrigued me so much that I felt compelled to experiment with it. So here it is: navigate to any news page on our website, such as the one you're reading now, press the @ key, and click the "get top 5 related articles" button. Within about 5 seconds you'll receive the five articles most relevant to the current one, using the jina-reranker-v1 model (it takes slightly longer with the jina-colbert-v1 model). All computation happens online and is handled entirely by the Reranker API. Below is a video demonstration of how it works:

To run this demo, you will need an API key with enough tokens left. If you exhaust your quota and cannot run the demo, you can generate a new key at https://jina.ai/reranker. Each new key comes with 1 million free tokens.

Implementation

The implementation is very simple: to find the articles most related to a given article on jina.ai/news/, we use the article currently being read (its title and excerpt) as the query, and all other 230+ articles on our news site (using their full text!) as the documents. We then send $(q, d_1, d_2, \cdots, d_{230})$ as the payload to the Reranker API. Once the response arrives, we use the returned document indices, which are already sorted by relevance, to display the results. The underlying code is as follows:

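const getRecommendedArticles = async () => {
  // currentNews, newsStore and apiKey come from the page's application state.
  // modelName must be declared before it is used in `data` below.
  const modelName = 'jina-reranker-v1-base-en';
  const rerankUrl = 'https://api.jina.ai/v1/rerank';

  // Query: title + excerpt of the article being read.
  const query = `${currentNews.title} ${currentNews.excerpt}`;

  // Candidate documents: every other article on the site.
  const docs = newsStore.allBlogs.filter((item) => item.slug !== currentNews.slug);

  const data = {
    model: modelName,
    query: query,
    // Send each article's full text as a plain string, not the whole article object.
    documents: docs.map((item) => item.html),
    top_n: 5,
  };

  const headers = {
    'Content-Type': 'application/json',
    Authorization: `Bearer ${apiKey}`,
  };

  const res = await fetch(rerankUrl, {
    method: 'POST',
    headers: headers,
    body: JSON.stringify(data),
  });
  if (!res.ok) {
    throw new Error(`Rerank request failed: ${res.status} ${res.statusText}`);
  }
  const resp = await res.json();

  // Each result carries the index of a document in `documents`; map it back to the article.
  const topKList = resp.results.map((item) => docs[item.index]);

  console.log(topKList);
  return topKList;
};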
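One way to make the logic above testable without calling the live API is to split it into a pure request builder, a pure result mapper, and a thin async wrapper that receives `fetch` as a parameter. This sketch assumes the same endpoint, payload fields, and `results[].index` response field as the code above; the function names and the `{ slug, title, excerpt, html }` article shape are hypothetical.

```javascript
const RERANK_URL = "https://api.jina.ai/v1/rerank";

// Pure: build the JSON body for one recommendation request.
// Articles are assumed (hypothetically) to look like { slug, title, excerpt, html }.
function buildRerankBody(current, allArticles, model, topN) {
  const candidates = allArticles.filter((a) => a.slug !== current.slug);
  return {
    candidates,
    body: {
      model,
      query: `${current.title} ${current.excerpt}`,
      documents: candidates.map((a) => a.html),
      top_n: topN,
    },
  };
}

// Pure: map API results (which reference positions in `documents`) back to articles.
function toRecommendations(candidates, results) {
  return results.map((r) => candidates[r.index]);
}

// Thin async wrapper; fetchFn defaults to the global fetch (browsers, Node 18+).
async function fetchRecommendations(
  current,
  allArticles,
  { apiKey, model = "jina-reranker-v1-base-en", topN = 5, fetchFn = globalThis.fetch } = {}
) {
  const { candidates, body } = buildRerankBody(current, allArticles, model, topN);
  const res = await fetchFn(RERANK_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
    body: JSON.stringify(body),
  });
  if (!res.ok) throw new Error(`Rerank request failed with status ${res.status}`);
  const { results } = await res.json();
  return toRecommendations(candidates, results);
}
```

In a unit test, pass a fake `fetchFn` that resolves to `{ ok: true, json: async () => ({ results: [...] }) }` and assert on the returned articles; the page code then only has to supply the real `fetch` and API key.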

To obtain an API key, visit our Reranker API page and navigate to the API section. If you already have an API key from our Embedding API, you can reuse it here.

And just like that, you'll see the results, which are quite promising for a first iteration, especially considering that the implementation process takes roughly 10 minutes.

Readers may have concerns about this implementation. Some of these critiques are probably overthinking it, while others are valid:

- Concerns about overly long full texts and the need for chunking are probably overthinking it: the `jina-reranker-v1` model can process queries of up to 512 tokens and documents of arbitrary length, while the `jina-colbert-v1` model can handle up to 8192 tokens for both queries and documents. Therefore, chunking the full text before sending it to the Reranker API is likely unnecessary; both models handle long contexts efficiently. Chunking, possibly the most cumbersome and heuristic part of the embedding, vector search, and rerank pipeline, is much less of an issue here. However, longer contexts do consume more tokens, which paid users of our API may need to consider. In this example, because we use the full text of all 233 articles, a single rerank query costs 300K+ tokens.

- The impact of raw versus cleaned data on quality. Adding data cleaning could indeed lead to improvements. For instance, we've observed that simply removing HTML tags (i.e., `docs.map(item => item.html.replace(/<[^>]*>?/gm, ''))`) significantly enhances recommendation quality for the `jina-reranker-v1` model, though the effect is less pronounced for the `jina-colbert-v1` model. This suggests that our ColBERT model was trained to be more tolerant of noisy text than the `jina-reranker-v1` model.

- The influence of different query constructions on quality. In the implementation above, we directly used the title and excerpt of the current article as the query. Is this the optimal way to construct the query? Would adding a prefix such as "What is the most related article to..." or "I'll tip you $20 if you recommend the best article," similar to prompts used with large language models, be beneficial? This is an interesting question, likely related to the model's training data distribution, which we plan to explore further.

- Building on the previous point about query construction, it would be intriguing to investigate the query's compositional abilities further, such as using a user's recent browsing history for personalized recommendations. It's particularly interesting to consider whether the system could understand not just positive examples in the query but also negative ones, e.g. `NOT_LIKE` ops, "Don't recommend me articles like this", or "I want to see fewer like this". We'll delve into this in the next section.
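The cleaning step from the second point can be factored into a small helper. This is a sketch that starts from the same regex with one deliberate change, which is our assumption and not what was measured above: tags are replaced with a space instead of an empty string, so text in adjacent elements such as `<p>a</p><p>b</p>` is not glued into one word, and runs of whitespace are then collapsed. A regex is not an HTML parser; it does not decode entities like `&amp;` or drop the contents of `<script>` and `<style>` blocks. `stripHtml` and `cleanDocuments` are hypothetical names.

```javascript
// Strip HTML tags with the regex from the text above, then normalize whitespace.
// Tags become spaces (a deviation from the original one-liner) so words in
// adjacent elements stay separated.
function stripHtml(html) {
  return html
    .replace(/<[^>]*>?/gm, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Prepare rerank documents from articles shaped (hypothetically) like { html }.
function cleanDocuments(articles) {
  return articles.map((item) => stripHtml(item.html));
}

console.log(cleanDocuments([{ html: "<h1>Title</h1><p>First <b>bold</b> line.</p>" }]));
// prints [ 'Title First bold line.' ]
```

Cleaning also shortens every document, so besides the quality effect described above it can reduce the token cost of each rerank call.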

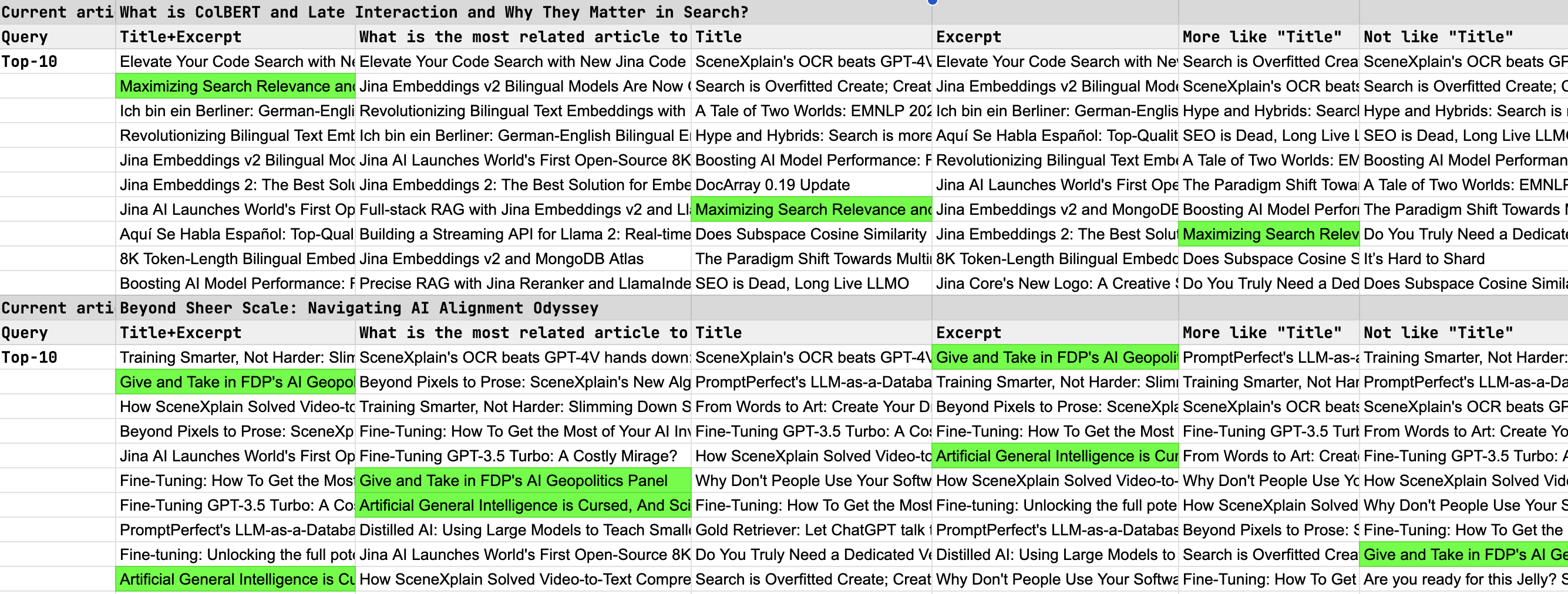

Empirical Study on Query Writing

To explore different ways of writing queries for the Jina Reranker API, we conducted a qualitative assessment of the top-10 results through human labeling (i.e., evaluated by ourselves), which makes sense because we have full knowledge of all the content published on our website. The query-writing strategies we examined included:

- Using the article's Title, Excerpt, and a combination of Title + Excerpt.

- Adopting "Prompt"-like instructions such as "more like this," "not like this," and "what is the most closely related article?"
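All of these variants can be generated from a single article record. In the sketch below, the function name and the `{ title, excerpt }` shape are hypothetical, and prepending each instruction phrase to the title + excerpt is an assumption about how the prompt-like queries were assembled, not a record of the exact strings we tested.

```javascript
// Build one query string per strategy for a given article.
// Combining instruction phrases as "prefix + title + excerpt" is an assumption.
function buildQueryVariants({ title, excerpt }) {
  const titleExcerpt = `${title} ${excerpt}`;
  return {
    title: title,
    excerpt: excerpt,
    titleExcerpt: titleExcerpt,
    moreLikeThis: `more like this: ${titleExcerpt}`,
    notLikeThis: `not like this: ${titleExcerpt}`,
    mostRelated: `what is the most closely related article? ${titleExcerpt}`,
  };
}

const variants = buildQueryVariants({ title: "Jina Reranker API", excerpt: "Rerank search results." });
for (const [name, query] of Object.entries(variants)) {
  console.log(`${name}: ${query}`);
}
```

Each variant would be sent as the `query` field with the same `documents` and `top_n: 10`, so the only thing that differs between the resulting top-10 lists is the query text.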

To test the reranker's efficacy, we selected two non-trivial articles as queries, aiming to pinpoint the most relevant articles among our catalog of over 200 posts, a challenge inspired by the "needle in a haystack" test for LLMs. Below, we have highlighted these "needles" in green for clarity.
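We judged the results by hand, but when the "needles" for a query are known in advance, the same check can be scripted. A minimal sketch, with hypothetical helper names and slugs: given the ranked slugs returned for one query variant and the list of needle slugs, report where each needle landed and what fraction of the needles made the top k.

```javascript
// For each needle, return its 1-based rank in the reranked list (null if absent).
function needleRanks(rankedSlugs, needleSlugs) {
  return needleSlugs.map((slug) => {
    const pos = rankedSlugs.indexOf(slug);
    return { slug, rank: pos === -1 ? null : pos + 1 };
  });
}

// Fraction of needles that appear within the top k results.
function hitRateAtK(rankedSlugs, needleSlugs, k) {
  if (needleSlugs.length === 0) return 0;
  const topK = new Set(rankedSlugs.slice(0, k));
  const hits = needleSlugs.filter((slug) => topK.has(slug)).length;
  return hits / needleSlugs.length;
}

const ranked = ["post-a", "post-b", "post-c", "post-d"];
console.log(needleRanks(ranked, ["post-c", "post-z"]));
console.log(hitRateAtK(ranked, ["post-c", "post-z"], 3)); // prints 0.5
```

Running this with `k = 10` for every query variant turns the green highlighting into a number per variant, which makes comparisons across variants easier to repeat.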

Summary

Based on the test results, we made the following observations:

- Combining the Title and Excerpt yields the best reranking results, with the Excerpt playing a significant role in enhancing reranking quality.

- Incorporating "prompt"-like instructions does not lead to any improvement.

- The reranker model currently does not process positive or negative qualifiers effectively: it does not understand terms such as "more like", "less like", or "not like".

The insights from points 2 and 3 suggest intriguing directions for future enhancements of the reranker. We believe that enabling on-the-fly prompting to change the sorting logic could significantly expand the reranker's capabilities, unlocking new applications such as personalized content curation and recommendation.
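Until the model itself understands qualifiers like "not like", one possible workaround is to keep every query plain and express preferences in how scores are combined. This is a client-side technique we have not evaluated here, not a feature of the Reranker API: rerank the same candidates once per liked (or recently read) article and once per disliked one, then add the positive runs and subtract the negative ones. The sketch assumes each run requested scores for every candidate (for example, `top_n` equal to the number of documents) and returns `{ index, relevance_score }` entries; `fuseRuns` and the weights are hypothetical placeholders.

```javascript
// Combine several rerank runs over the SAME candidate list into one ranking.
// Each run: { weight, results: [{ index, relevance_score }] }.
// Positive weight = "more like this", negative weight = "less like this".
function fuseRuns(runs, numDocs, topN) {
  const totals = new Array(numDocs).fill(0);
  for (const { weight, results } of runs) {
    for (const { index, relevance_score } of results) {
      totals[index] += weight * relevance_score;
    }
  }
  return totals
    .map((score, index) => ({ index, score }))
    .sort((a, b) => b.score - a.score || a.index - b.index)
    .slice(0, topN);
}

const liked = {
  weight: 1,
  results: [
    { index: 0, relevance_score: 0.9 },
    { index: 1, relevance_score: 0.8 },
    { index: 2, relevance_score: 0.1 },
  ],
};
const disliked = {
  weight: -0.5,
  results: [
    { index: 0, relevance_score: 0.9 },
    { index: 1, relevance_score: 0.1 },
    { index: 2, relevance_score: 0.2 },
  ],
};
console.log(fuseRuns([liked, disliked], 3, 2));
```

With only the positive run, article 0 would rank first; subtracting half of its similarity to the disliked article lets article 1 overtake it. Scores from different queries are not guaranteed to be on comparable scales, and every extra run is a full rerank request with its own token cost, so the weights are knobs to tune rather than fixed values.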