Jina CLIP v1: A Truly Multimodal Embeddings Model for Text and Image

Jina AI's new multimodal embedding model not only outperforms OpenAI CLIP in text-image retrieval, it's a solid image embedding model and state-of-the-art text embedding model at the same time. You don't need different models for different modalities any more.

jina-clip-v2 has been released on Nov. 22, 2024!

Jina CLIP v1 (jina-clip-v1) is a new multimodal embedding model that extends the capabilities of OpenAI’s original CLIP model. With this new model, users have a single embedding model that delivers state-of-the-art performance in both text-only and text-image cross-modal retrieval. Jina AI has improved on OpenAI CLIP’s performance by 165% in text-only retrieval, and 12% in image-to-image retrieval, with identical or mildly better performance in text-to-image and image-to-text tasks. This enhanced performance makes Jina CLIP v1 indispensable for working with multimodal inputs.

jina-clip-v1 improves on OpenAI CLIP in every category of retrieval.In this article, we will first discuss the shortcomings of the original CLIP model and how we have addressed them using a unique co-training method. Then, we will demonstrate the effectiveness of our model on various retrieval benchmarks. Finally, we will provide detailed instructions on how users can get started with Jina CLIP v1 via our Embeddings API and Hugging Face.

The CLIP Architecture for Multimodal AI

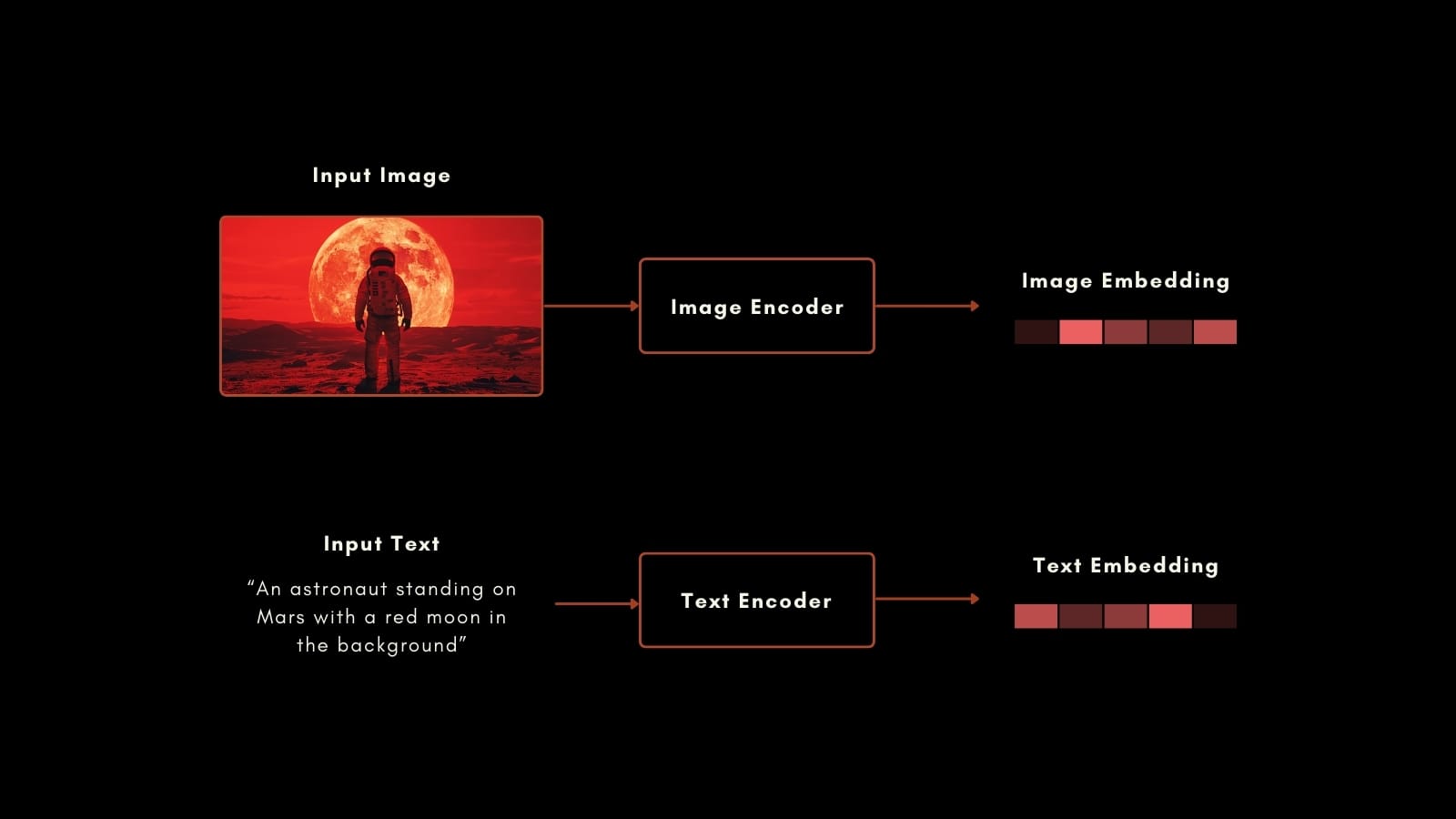

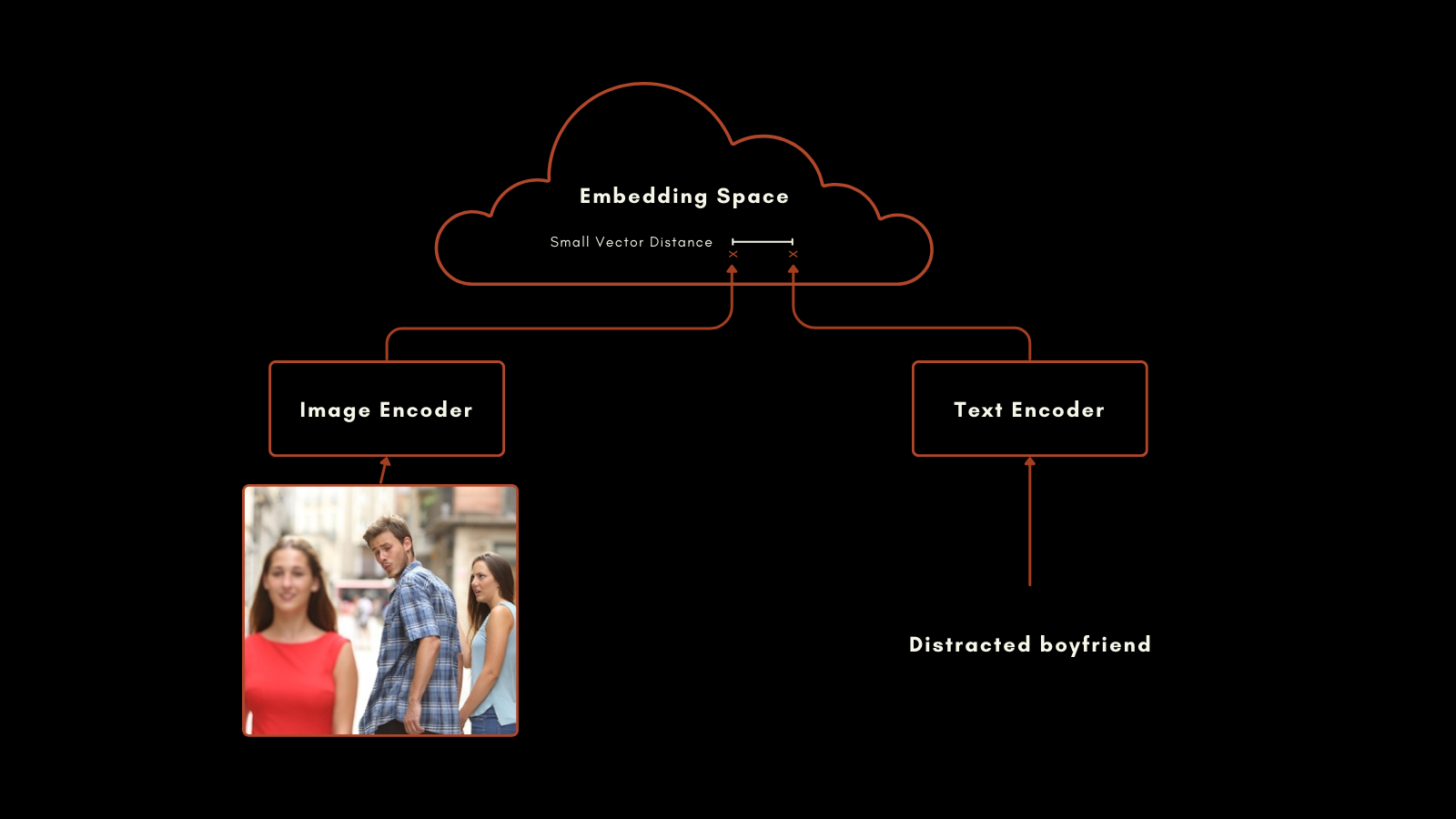

In January 2021, OpenAI released the CLIP (Contrastive Language–Image Pretraining) model. CLIP has a straightforward yet ingenious architecture: it combines two embedding models, one for texts and one for images, into a single model with a single output embedding space. Its text and image embeddings are directly comparable to each other, making the distance between a text embedding and an image embedding proportionate to how well that text describes the image, and vice versa.

This has proven to be very useful in multimodal information retrieval and zero-shot image classification. Without further special training, CLIP performed well at placing images into categories with natural language labels.

The text embedding model in the original CLIP was a custom neural network with only 63 million parameters. On the image side, OpenAI released CLIP with a selection of ResNet and ViT models. Each model was pre-trained for its individual modality and then trained with captioned images to produce similar embeddings for prepared image-text pairs.

This approach yielded impressive results. Particularly notable is its zero-shot classification performance. For example, even though the training data did not include labeled images of astronauts, CLIP could correctly identify pictures of astronauts based on its understanding of related concepts in texts and images.

However, OpenAI’s CLIP has two important drawbacks:

- First is its very limited text input capacity. It can take a maximum 77 tokens of input, but empirical analysis shows that in practice it doesn’t use more than 20 tokens to produce its embeddings. This is because CLIP was trained from images with captions, and captions tend to be very short. This is in contrast to current text embedding models which support several thousand tokens.

- Second, the performance of its text embeddings in text-only retrieval scenarios is very poor. Image captions are a very limited kind of text, and do not reflect the broad array of use cases a text embedding model would be expected to support.

In most real use cases, text-only and image-text retrieval are combined or at least both are available for tasks. Maintaining a second embeddings model for text-only tasks effectively doubles the size and complexity of your AI framework.

Jina AI’s new model addresses these issues directly, and jina-clip-v1 takes advantage of the progress made in the last several years to bring state-of-the-art performance to tasks involving all combinations of text and image modalities.

Introducing Jina CLIP v1

Jina CLIP v1 retains the OpenAI’s original CLIP schema: two models co-trained to produce output in the same embedding space.

For text encoding, we adapted the Jina BERT v2 architecture used in the Jina Embeddings v2 models. This architecture supports a state-of-the-art 8k token input window and outputs 768-dimensional vectors, producing more accurate embeddings from longer texts. This is more than 100 times the 77 token input supported in the original CLIP model.

For image embeddings, we are using the latest model from the Beijing Academy for Artificial Intelligence: the EVA-02 model. We have empirically compared a number of image AI models, testing them in cross-modal contexts with similar pre-training, and EVA-02clearly outperformed the others. It’s also comparable to the Jina BERT architecture in model size, so that compute loads for image and text processing tasks are roughly identical.

These choices produce important benefits for users:

- Better performance on all benchmarks and all modal combinations, and especially large improvements in text-only embedding performance.

EVA-02's empirically superior performance both in image-text and image-only tasks, with the added benefit of Jina AI’s additional training, improving image-only performance.- Support for much longer text inputs. Jina Embeddings’ 8k token input support makes it possible to process detailed textual information and correlate it with images.

- A large net savings in space, compute, code maintenance, and complexity because this multimodal model is highly performant even in non-multimodal scenarios.

Training

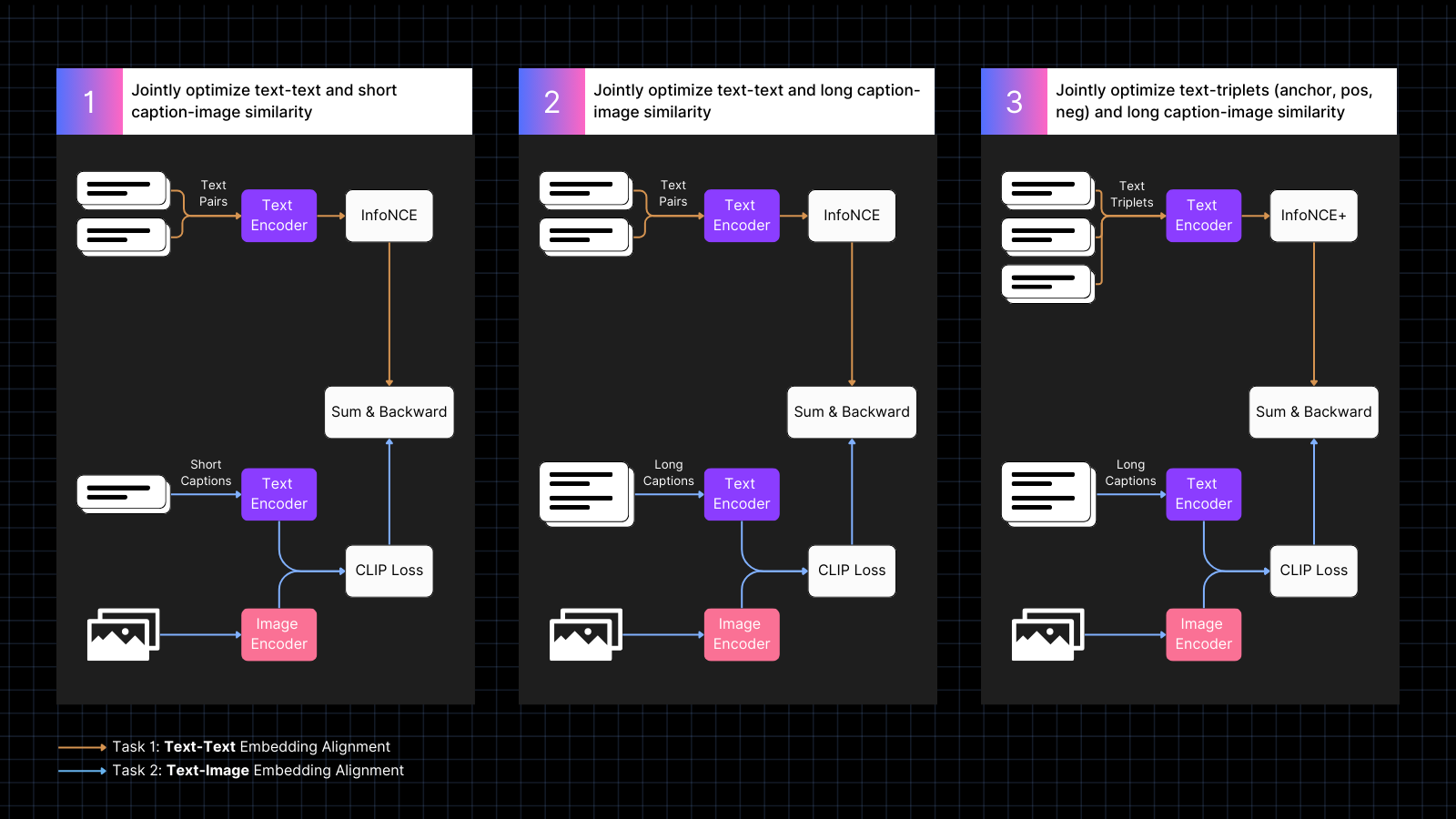

Part of our recipe for high-performance multimodal AI is our training data and procedure. We notice that the very short length of texts used in image captions is the major cause of poor text-only performance in CLIP-style models, and our training is explicitly designed to remedy this.

Training takes place in three steps:

- Use captioned image data to learn to align image and text embeddings, interleaved with text pairs with similar meanings. This co-training jointly optimizes for the two kinds of tasks. The text-only performance of the model declines during this phase, but not as much as if we had trained with only image-text pairs.

- Train using synthetic data which aligns images with larger texts, generated by an AI model, that describes the image. Continue training with text-only pairs at the same time. During this phase, the model learns to attend to larger texts in conjunction with images.

- Use text triplets with hard negatives to further improve text-only performance by learning to make finer semantic distinctions. At the same time, continue training using synthetic pairs of images and long texts. During this phase, text-only performance improves dramatically without the model losing any image-text abilities.

For more information on the details of training and model architecture, please read our recent paper:

New State-of-the-Art in Multimodal Embeddings

We evaluated Jina CLIP v1’s performance across text-only, image-only, and cross-modal tasks involving both input modalities. We used the MTEB retrieval benchmark to evaluate text-only performance. For image-only tasks, we used the CIFAR-100 benchmark. For cross-model tasks, we evaluate on Flickr8k, Flickr30K, and MSCOCO Captions, which are included in the CLIP Benchmark.

The results are summarized in the table below:

| Model | Text-Text | Text-to-Image | Image-to-Text | Image-Image |

|---|---|---|---|---|

| jina-clip-v1 | 0.429 | 0.899 | 0.803 | 0.916 |

| openai-clip-vit-b16 | 0.162 | 0.881 | 0.756 | 0.816 |

| % increase vs OpenAI CLIP |

165% | 2% | 6% | 12% |

You can see from these results that jina-clip-v1 outperforms OpenAI’s original CLIP in all categories, and is dramatically better in text-only and image-only retrieval. Averaged over all categories, this is a 46% improvement in performance.

You can find a more detailed evaluation in our recent paper.

Getting Started with Embeddings API

You can easily integrate Jina CLIP v1 into your applications using the Jina Embeddings API.

The code below shows you how to call the API to get embeddings for texts and images, using the requests package in Python. It passes a text string and a URL to an image to the Jina AI server and returns both encodings.

<YOUR_JINA_AI_API_KEY> with an activated Jina API key. You can get a trial key with a million free tokens from the Jina Embeddings web page.import requests

import numpy as np

from numpy.linalg import norm

cos_sim = lambda a,b: (a @ b.T) / (norm(a)*norm(b))

url = 'https://api.jina.ai/v1/embeddings'

headers = {

'Content-Type': 'application/json',

'Authorization': 'Bearer <YOUR_JINA_AI_API_KEY>'

}

data = {

'input': [

{"text": "Bridge close-shot"},

{"url": "https://fastly.picsum.photos/id/84/1280/848.jpg?hmac=YFRYDI4UsfbeTzI8ZakNOR98wVU7a-9a2tGF542539s"}],

'model': 'jina-clip-v1',

'encoding_type': 'float'

}

response = requests.post(url, headers=headers, json=data)

sim = cos_sim(np.array(response.json()['data'][0]['embedding']), np.array(response.json()['data'][1]['embedding']))

print(f"Cosine text<->image: {sim}")

Integration with major LLM Frameworks

Jina CLIP v1 is already available for LlamaIndex and LangChain:

- LlamaIndex: Use

JinaEmbeddingwith theMultimodalEmbeddingbase class, and invokeget_image_embeddingsorget_text_embeddings. - LangChain: Use

JinaEmbeddings, and invokeembed_imagesorembed_documents.

Pricing

Both text and image inputs are charged by token consumption.

For text in English, we have empirically calculated that on average you will need 1.1 tokens for every word.

For images, we count the number of 224x224 pixel tiles required to cover your image. Some of these tiles may be partly blank but count just the same. Each tile costs 1,000 tokens to process.

Example

For an image with dimensions 750x500 pixels:

- The image is divided into 224x224 pixel tiles.

- To calculate the number of tiles, take the width in pixels and divide by 224, then round up to the nearest integer.

750/224 ≈ 3.35 → 4 - Repeat for the height in pixels:

500/224 ≈ 2.23 → 3

- To calculate the number of tiles, take the width in pixels and divide by 224, then round up to the nearest integer.

- The total number of tiles required in this example is:

4 (horizontal) x 3 (vertical) = 12 tiles - The cost will be 12 x 1,000 = 12,000 tokens

Enterprise Support

We are introducing a new benefit for users who purchase the Production Deployment plan with 11 billion tokens. This includes:

- One hours of consultation with our product and engineering teams to discuss your specific use cases and requirements.

- A customized Python notebook designed for your RAG (Retrieval-Augmented Generation) or vector search use case, demonstrating how to integrate Jina AI’s models into your application.

- Assignment to an account executive and priority email support to ensure your needs are met promptly and efficiently.

Open-Source Jina CLIP v1 on Hugging Face

Jina AI is committed to an open-source search foundation, and for that purpose, we are making this model available for free under an Apache 2.0 license, on Hugging Face.

You can find example code to download and run this model on your own system or cloud installation on the Hugging Face model page for jina-clip-v1 .

Summary

Jina AI’s latest model — jina-clip-v1 — represents a significant advance in multimodal embedding models, offering substantial performance gains over OpenAI's CLIP. With notable improvements in text-only and image-only retrieval tasks, as well as competitive performance in text-to-image and image-to-text tasks, it stands as a promising solution for complex embeddings use cases.

This model currently only supports English-language texts due to resource constraints. We are working to expand its capabilities to more languages.