Jina Embeddings v2 Bilingual Models Are Now Open-Source On Hugging Face

Jina AI's open-source bilingual embedding models for German-English and Chinese-English are now on Hugging Face. We’re going to walk through installation and cross-language retrieval.

Jina AI has released its state-of-the-art open-source bilingual embedding models for German-English and Chinese-English language pairs via Hugging Face.

In this tutorial, we’re going to walk through a very minimal installation and use case that will cover:

- Downloading Jina Embedding models from Hugging Face.

- Using the models to get encodings from texts in German and English.

- Building a very rudimentary embeddings-based neural search engine for cross-language queries.

We will show you how to use Jina Embeddings to write English queries that retrieve matching texts in German and vice-versa.

This tutorial works the same for the Chinese model. Just follow the instructions in the section (towards the end) titled Querying in Chinese to get the Chinese-English bilingual model and an example document in Chinese.

Bilingual Embedding Models

A bilingual embedding model is a model that maps texts in two languages — German and English in this tutorial, Chinese and English for the Chinese model — to the same embedding space. And, it does it in such a way that if a German text and an English text mean the same thing, their corresponding embedding vectors will be close together.

Models like this are very well suited to cross-language information retrieval applications, which we will show in this tutorial, but can also serve as a basis for RAG-based chatbots, multilingual text categorization, summarization, sentiment analysis, and any other application that uses embeddings. By using models like these, you can treat texts in both languages as if they were written in the same language.

Although many giant language models trained claim to support many different languages, they do not support all languages equally. There are growing questions of bias caused by the dominance of English on the Internet and input sources distorted by the widespread online publication of machine-translated texts. By focusing on two languages, we can better control embedding quality for both, minimizing bias while producing much smaller models with similar or higher performance than giant models that purport to handle dozens of languages.

Jina Embeddings v2 bilingual models support 8,192 input context tokens, enabling them not just to support two languages, but also to support relatively large segments of text compared to comparable models. This makes them ideal for more complex use cases where much more textual information has to be processed into embeddings.

Follow along on Google Colab

This tutorial has an accompanying notebook that you can run on Google Colab, or locally on your own system.

Installing the Prerequisites

Make sure the current environment has the relevant libraries installed. You will need the latest version of transformers, so even if it is already installed, run:

pip install -U transformers

This tutorial will use the FAISS library from Meta to do vector search and comparison. To install it, run:

pip install faiss-cpu

We will also be using Beautiful Soup to process the input data in this tutorial, so make sure it is installed:

pip install bs4

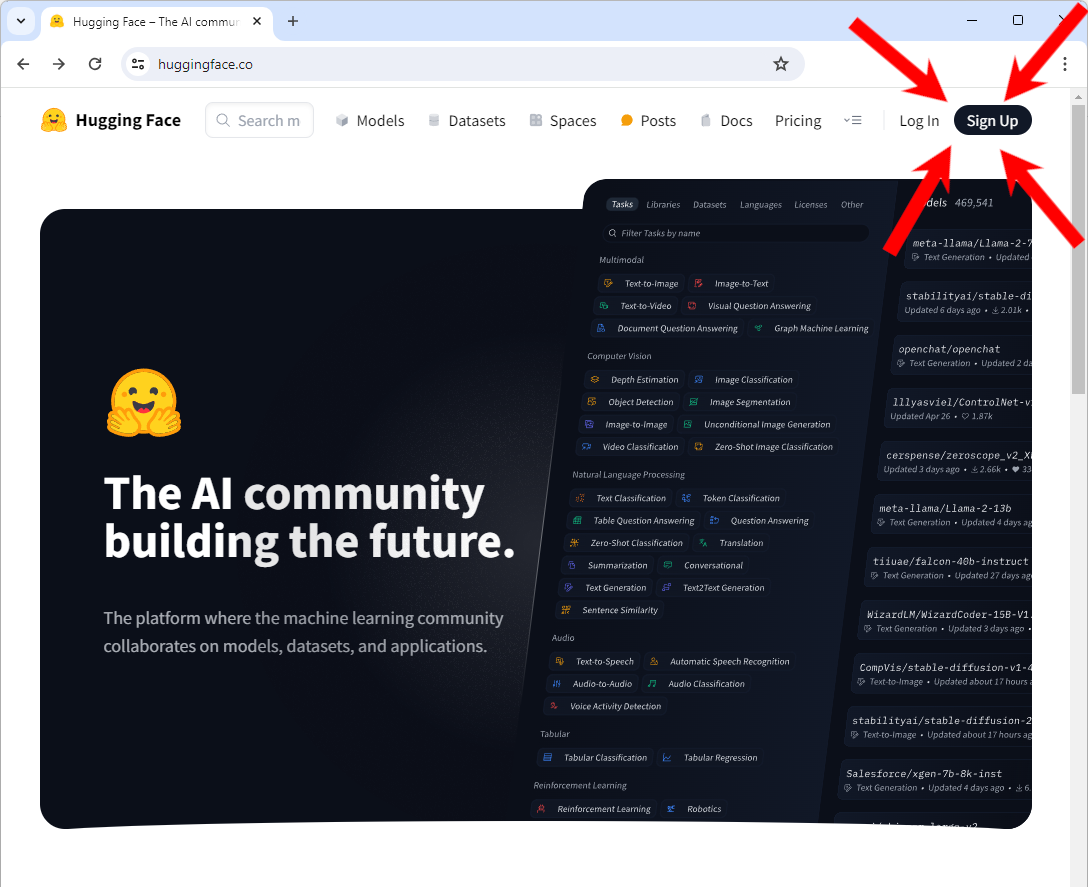

Access to Hugging Face

You will need access to Hugging Face, specifically an account and an access token to download models.

If you do not have an account on Hugging Face:

Go to https://huggingface.co/ and you should see a “Sign Up” button on the upper right of the page. Click it and follow the instructions from there to make a new account.

After you are logged into your account:

Follow the instructions on the Hugging Face website to get an access token.

You need to copy this token into an environment variable called HF_TOKEN. If you’re working in a notebook (on Google Colab, for example) or setting it internally in a Python program, use the following Python code:

import os

os.environ['HF_TOKEN'] = "<your token here>"

In your shell, use whatever the provided syntax is for setting an environment variable. In bash :

export HF_TOKEN="<your token here>"

Download Jina Embeddings v2 for German and English

Once your token is set, you can download the Jina Embeddings German-English bilingual model using the transformers library:

from transformers import AutoModel

model = AutoModel.from_pretrained('jinaai/jina-embeddings-v2-base-de', trust_remote_code=True)

This may take several minutes the first time you do it, but the model will be cached locally after that, so don’t worry if you restart this tutorial later.

Download English-language Data

For this tutorial, we are going to get the English-language version of the book Pro Git: Everything You Need to Know About Git. This book is also available in Chinese and German, which we’ll use later in this tutorial.

To download the EPUB version, run the following command:

wget -O progit-en.epub https://open.umn.edu/opentextbooks/formats/3437This copies the book to a file named progit-en.epub in the local directory.

Alternatively, you can just visit the link https://open.umn.edu/opentextbooks/formats/3437 to download it to a local drive. It is available under the Creative Commons Attribution Non Commercial Share Alike 3.0 license.

Processing the Data

This particular text has an internal structure of hierarchical sections, which we can easily find by looking for the <section> tag in the underlying XHTML data. The code below reads the EPUB file and splits it up using the internal structure of an EPUB file and the <section> tag, then converts each section to plain text without XHTML tags. It creates a Python dictionary whose keys are a set of strings indicating each section’s location in the book, and whose values are the plain text contents of that section.

from zipfile import ZipFile

from bs4 import BeautifulSoup

import copy

def decompose_epub(file_name):

def to_top_text(section):

selected = copy.copy(section)

while next_section := selected.find("section"):

next_section.decompose()

return selected.get_text().strip()

ret = {}

with ZipFile(file_name, 'r') as zip:

for name in zip.namelist():

if name.endswith(".xhtml"):

data = zip.read(name)

doc = BeautifulSoup(data.decode('utf-8'), 'html.parser')

ret[name + ":top"] = to_top_text(doc)

for num, sect in enumerate(doc.find_all("section")):

ret[name + f"::{num}"] = to_top_text(sect)

return ret

Then, run the decompose_epub function on the EPUB file you downloaded before:

book_data = decompose_epub("progit-en.epub")

The variable book_data will now have 583 sections in it. For example:

print(book_data['EPUB/ch01-getting-started.xhtml::12'])

Result:

The Command Line

There are a lot of different ways to use Git.

There are the original command-line tools, and there are many graphical user interfaces of varying capabilities.

For this book, we will be using Git on the command line.

For one, the command line is the only place you can run all Git commands — most of the GUIs implement only a partial subset of Git functionality for simplicity.

If you know how to run the command-line version, you can probably also figure out how to run the GUI version, while the opposite is not necessarily true.

Also, while your choice of graphical client is a matter of personal taste, all users will have the command-line tools installed and available.

So we will expect you to know how to open Terminal in macOS or Command Prompt or PowerShell in Windows.

If you don’t know what we’re talking about here, you may need to stop and research that quickly so that you can follow the rest of the examples and descriptions in this book.

Generating and Indexing Embeddings with Jina Embeddings v2 and FAISS

For each of the 583 sections, we will generate an embedding and store it in a FAISS index. Jina Embeddings v2 models accept input of up to 8192 tokens, large enough that for a book like this, we don’t need to do any further text segmentation or check if any section has too many tokens. The longest section in the book has roughly 12,000 characters, which, for normal English, should be far below the 8k token limit.

To generate a single embedding, you use the encode method of the model we downloaded. For example:

model.encode([book_data['EPUB/ch01-getting-started.xhtml::12']])

This returns an array containing a single 768 dimension vector:

array([[ 6.11135997e-02, 1.67829826e-01, -1.94809273e-01,

4.45595086e-02, 3.28837298e-02, -1.33441269e-01,

1.35364473e-01, -1.23119736e-02, 7.51526654e-02,

-4.25386652e-02, -6.91794455e-02, 1.03527725e-01,

-2.90831417e-01, -6.21018047e-03, -2.16205455e-02,

-2.20803712e-02, 1.50471330e-01, -3.31433356e-01,

-1.48741454e-01, -2.10959971e-01, 8.80039856e-02,

....

That is an embedding.

Jina Embeddings models are set up to allow batch processing. The optimal batch size depends on the hardware you use when running. A large batch size risks running out of memory. A small batch size will take longer to process.

batch_size=5 worked on Google Colab in free tier without a GPU, and took about an hour to generate the entire set of embeddings.In production, we recommend using much more powerful hardware or using Jina AI’s Embedding API service. Follow the link below to find out how it works and how to get started with free access.

The code below generates the embeddings and stores them in a FAISS index. Set the variable batch_size as appropriate to your resources.

import faiss

batch_size = 5

vector_data = []

faiss_index = faiss.IndexFlatIP(768)

data = [(key, txt) for key, txt in book_data.items()]

batches = [data[i:i + batch_size] for i in range(0, len(data), batch_size)]

for ind, batch in enumerate(batches):

print(f"Processing batch {ind + 1} of {len(batches)}")

batch_embeddings = model.encode([x[1] for x in batch], normalize_embeddings=True)

vector_data.extend(batch)

faiss_index.add(batch_embeddings)

When working in a production environment, a Python dictionary is not an adequate or performant way to handle documents and embeddings. You should use a purpose-built vector database, which will have its own directions for data insertion.

Querying in German for English Results

When we query for something from this set of texts, here’s what will happen:

- The Jina Embeddings German-English model will create an embedding for the query.

- We will use the FAISS index (

faiss_index) to get the stored embedding with the highest cosine to the query embedding and return its place in the index. - We will look up the corresponding text in the vector data array (

vector_data) and print out the cosine, the location of the text, and the text itself.

That’s what the query function below does.

def query(query_str):

query = model.encode([query_str], normalize_embeddings=True)

cosine, index = faiss_index.search(query, 1)

print(f"Cosine: {cosine[0][0]}")

loc, txt = vector_data[index[0][0]]

print(f"Location: {loc}\\nText:\\n\\n{txt}")

Now let’s try it out.

# Translation: "How do I roll back to a previous version?"

query("Wie kann ich auf eine frühere Version zurücksetzen?")

Result:

Cosine: 0.5202275514602661

Location: EPUB/ch02-git-basics-chapter.xhtml::20

Text:

Undoing things with git restore

Git version 2.23.0 introduced a new command: git restore.

It’s basically an alternative to git reset which we just covered.

From Git version 2.23.0 onwards, Git will use git restore instead of git reset for many undo operations.

Let’s retrace our steps, and undo things with git restore instead of git reset.

This is a pretty good choice to answer the question. Let’s try another one:

# Translation: "What does 'version control' mean?"

query("Was bedeutet 'Versionsverwaltung'?")

Result:

Cosine: 0.5001817941665649

Location: EPUB/ch01-getting-started.xhtml::1

Text:

About Version Control

What is “version control”, and why should you care?

Version control is a system that records changes to a file or set of files over time so that you can recall specific versions later.

For the examples in this book, you will use software source code as the files being version controlled, though in reality you can do this with nearly any type of file on a computer.

If you are a graphic or web designer and want to keep every version of an image or layout (which you would most certainly want to), a Version Control System (VCS) is a very wise thing to use.

It allows you to revert selected files back to a previous state, revert the entire project back to a previous state, compare changes over time, see who last modified something that might be causing a problem, who introduced an issue and when, and more.

Using a VCS also generally means that if you screw things up or lose files, you can easily recover.

In addition, you get all this for very little overhead.

Try it with your own German questions to see how well it works. As a general practice, when dealing with text information retrieval, you should ask for three to five responses instead of just one. The best answer is often not the first one.

Reversing the Roles: Querying German documents with English

The book Pro Git: Everything You Need to Know About Git is also available in German. We can use this same model to give this demo with the languages reversed.

Download the ebook:

wget -O progit-de.epub https://open.umn.edu/opentextbooks/formats/3454

This copies the book to a file named progit-de.epub. We then process it the same way we did for the English book:

book_data = decompose_epub("progit-de.epub")

And then generate the embeddings the same way as before:

batch_size = 5

vector_data = []

faiss_index = faiss.IndexFlatIP(768)

data = [(key, txt) for key, txt in book_data.items()]

batches = [data[i:i + batch_size] for i in range(0, len(data), batch_size)]

for ind, batch in enumerate(batches):

print(f"Processing batch {ind + 1} of {len(batches)}")

batch_embeddings = model.encode([x[1] for x in batch], normalize_embeddings=True)

vector_data.extend(batch)

faiss_index.add(batch_embeddings)

We can now use the same query function to search in English for answers in German:

query("What is version control?")

Result:

Cosine: 0.6719034910202026

Location: EPUB/ch01-getting-started.xhtml::1

Text:

Was ist Versionsverwaltung?

Was ist „Versionsverwaltung“, und warum sollten Sie sich dafür interessieren?

Versionsverwaltung ist ein System, welches die Änderungen an einer oder einer Reihe von Dateien über die Zeit hinweg protokolliert, sodass man später auf eine bestimmte Version zurückgreifen kann.

Die Dateien, die in den Beispielen in diesem Buch unter Versionsverwaltung gestellt werden, enthalten Quelltext von Software, tatsächlich kann in der Praxis nahezu jede Art von Datei per Versionsverwaltung nachverfolgt werden.

Als Grafik- oder Webdesigner möchte man zum Beispiel in der Lage sein, jede Version eines Bildes oder Layouts nachverfolgen zu können. Als solcher wäre es deshalb ratsam, ein Versionsverwaltungssystem (engl. Version Control System, VCS) einzusetzen.

Ein solches System erlaubt es, einzelne Dateien oder auch ein ganzes Projekt in einen früheren Zustand zurückzuversetzen, nachzuvollziehen, wer zuletzt welche Änderungen vorgenommen hat, die möglicherweise Probleme verursachen, herauszufinden wer eine Änderung ursprünglich vorgenommen hat und viele weitere Dinge.

Ein Versionsverwaltungssystem bietet allgemein die Möglichkeit, jederzeit zu einem vorherigen, funktionierenden Zustand zurückzukehren, auch wenn man einmal Mist gebaut oder aus irgendeinem Grund Dateien verloren hat.

All diese Vorteile erhält man für einen nur sehr geringen, zusätzlichen Aufwand.

This section’s title translates as “What is version control?”, so this is a good response.

Querying in Chinese

These examples will work exactly the same way with Jina Embeddings v2 for Chinese and English. To use the Chinese model instead, just run the following:

from transformers import AutoModel

model = AutoModel.from_pretrained('jinaai/jina-embeddings-v2-base-zh', trust_remote_code=True)

And to get the Chinese edition of Pro Git: Everything You Need to Know About Git:

wget -O progit-zh.epub https://open.umn.edu/opentextbooks/formats/3455

Then, process the Chinese book:

book_data = decompose_epub("progit-zh.epub")

All the other code in this tutorial will work the same way.

The Future: More Languages, including Programming

We will be rolling out more bilingual models in the immediate future, with Spanish and Japanese already in the works, as well as a model that supports English and several major programming languages. These models are ideally suited to international enterprises that manage multilingual information, and can serve as the cornerstone for AI-powered information retrieval and RAG-based generative language models, inserting themselves into a variety of cutting-edge AI use cases.

Jina AI’s models are compact and perform among the best in their class, showing how you don’t need the biggest model to get the best performance. By focusing on bilingual performance, we produce models that are both better at those languages, easier to adapt, and more cost-effective than large models trained on uncurated data.

Jina Embeddings are available from Hugging Face, in the AWS marketplace for use in Sagemaker, and via the Jina Embeddings web API. They are fully integrated into many AI process frameworks and vector databases.

See the Jina Embeddings website for more information, or contact us to discuss how Jina AI’s offerings can fit into your business processes.