Jina Reranker v2 for Agentic RAG: Ultra-Fast, Multilingual, Function-Calling & Code Search

Jina Reranker v2 is the best-in-class reranker built for Agentic RAG. It features function-calling support, multilingual retrieval for over 100 languages, code search capabilities, and offers a 6x speedup over v1.

Today, we are releasing Jina Reranker v2 (jina-reranker-v2-base-multilingual), the latest and top-performing neural reranker in our search foundation family. With Jina Reranker v2, developers of RAG and search systems get:

- Multilingual: More relevant search results in 100+ languages, outperforming bge-reranker-v2-m3;

- Agentic: State-of-the-art function-calling and text-to-SQL aware document reranking for agentic RAG;

- Code retrieval: Top performance on code retrieval tasks; and

- Ultra-fast: 15x the document throughput of bge-reranker-v2-m3, and 6x that of jina-reranker-v1-base-en.

You can get started with Jina Reranker v2 via our Reranker API, where we are offering 1M free tokens for all new users.

In this article, we'll elaborate on these new features supported by Jina Reranker v2, showing how our reranker model performs compared to other state-of-the-art models (including Jina Reranker v1), and explain the training process that led Jina Reranker v2 to reach top performance in task accuracy and document throughput.

Recap: Why You Need a Reranker

While embedding models are the most widely used and understood component in search foundation, they often sacrifice precision for retrieval speed. Embedding-based search models are typically bi-encoders: each document is embedded and stored, queries are embedded at search time, and retrieval is based on the similarity of the query’s embedding to the documents’ embeddings. In this setup, many nuances of token-level interaction between a user’s query and the matched documents are lost, because the query and the documents never “see” each other; only their embeddings do. This can cost retrieval accuracy, and that is where cross-encoder reranker models excel.

Rerankers address this lack of fine-grained semantics by employing a cross-encoder architecture, where query-document pairs are encoded together to produce a relevance score instead of an embedding. Studies have shown that, for most RAG systems, use of a reranker model improves semantic grounding and reduces hallucinations.
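To make the difference concrete, here is a toy sketch of the retrieve-then-rerank pattern. Both scorers are simplistic stand-ins made up for illustration, not Jina models: a bag-of-words "embedding" plays the bi-encoder, and a bigram-overlap function plays the cross-encoder. For the query "dog bites man", the bi-encoder cannot tell "man bites dog" apart from the query (same bag of words), while the pairwise scorer, which reads query and document together, restores the right order.

```python
import math
from collections import Counter

# Toy stand-ins (assumptions for illustration, not Jina models).

def embed(text: str) -> Counter:
    """Bi-encoder stand-in: each text is encoded on its own (bag of words),
    so word order and query-document interactions are lost."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def cross_score(query: str, doc: str) -> float:
    """Cross-encoder stand-in: reads query and document together, so it can use
    pairwise signals such as which query bigrams appear in the document."""
    q, d = query.lower().split(), doc.lower().split()
    q_bigrams, d_bigrams = set(zip(q, q[1:])), set(zip(d, d[1:]))
    unigram = len(set(q) & set(d)) / len(set(q))
    bigram = len(q_bigrams & d_bigrams) / max(len(q_bigrams), 1)
    return unigram + bigram


def retrieve_then_rerank(query, corpus, k_retrieve=2, k_final=1):
    # Stage 1: documents are embedded independently (as at indexing time),
    # then ranked by similarity to the query embedding.
    index = [(doc, embed(doc)) for doc in corpus]
    q_vec = embed(query)
    by_similarity = sorted(index, key=lambda pair: cosine(q_vec, pair[1]), reverse=True)
    shortlist = [doc for doc, _ in by_similarity[:k_retrieve]]
    # Stage 2: the more expensive joint scorer only sees the shortlist.
    return sorted(shortlist, key=lambda doc: cross_score(query, doc), reverse=True)[:k_final]


corpus = ["man bites dog", "dog bites man today", "the cat sat on the mat"]
print(retrieve_then_rerank("dog bites man", corpus, k_retrieve=2, k_final=2))
# ['dog bites man today', 'man bites dog']
```

The split into a cheap first stage and a pairwise second stage is the point: the joint scorer is too slow to run over a whole corpus, so it only reorders a shortlist.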

Multilingual Support with Jina Reranker v2

Jina Reranker v1 distinguished itself by achieving state-of-the-art performance on four key English-language benchmarks. With Jina Reranker v2, we're significantly extending its reranking capabilities with multilingual support for more than 100 languages and cross-lingual tasks!

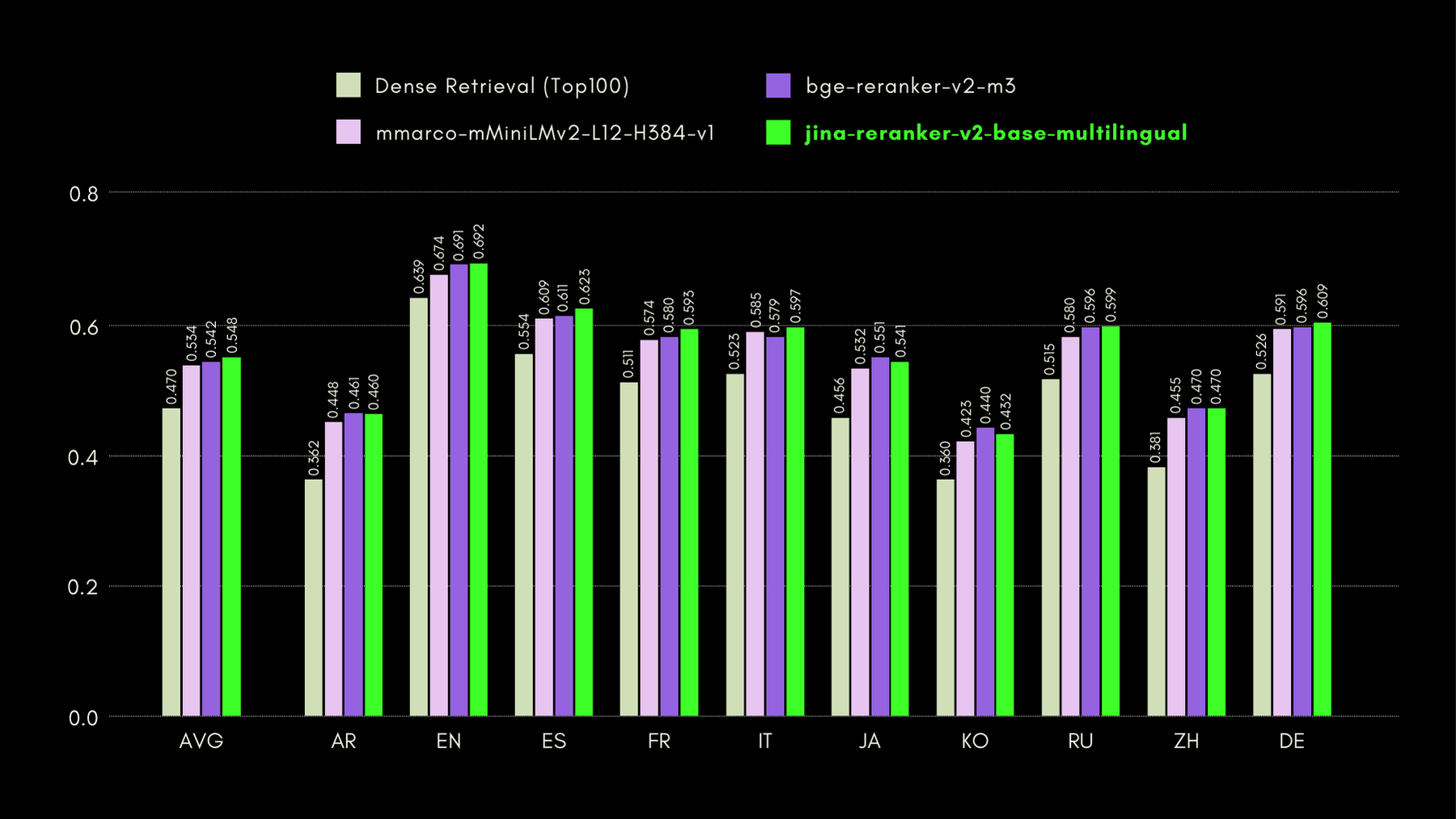

To evaluate the cross-lingual and English-language capabilities of Jina Reranker v2, we compare its performance to similar reranker models on the three benchmarks listed below:

MKQA: Multilingual Knowledge Questions and Answers

This dataset comprises questions and answers in 26 languages, derived from real-world knowledge bases, and is designed to evaluate the cross-lingual performance of question-answering systems. MKQA consists of English-language queries and their manual translations into other languages, together with answers in multiple languages, including English.

In the graph below, we report the recall@10 score of each reranker, with a “dense retriever” baseline that performs traditional embedding-based search:

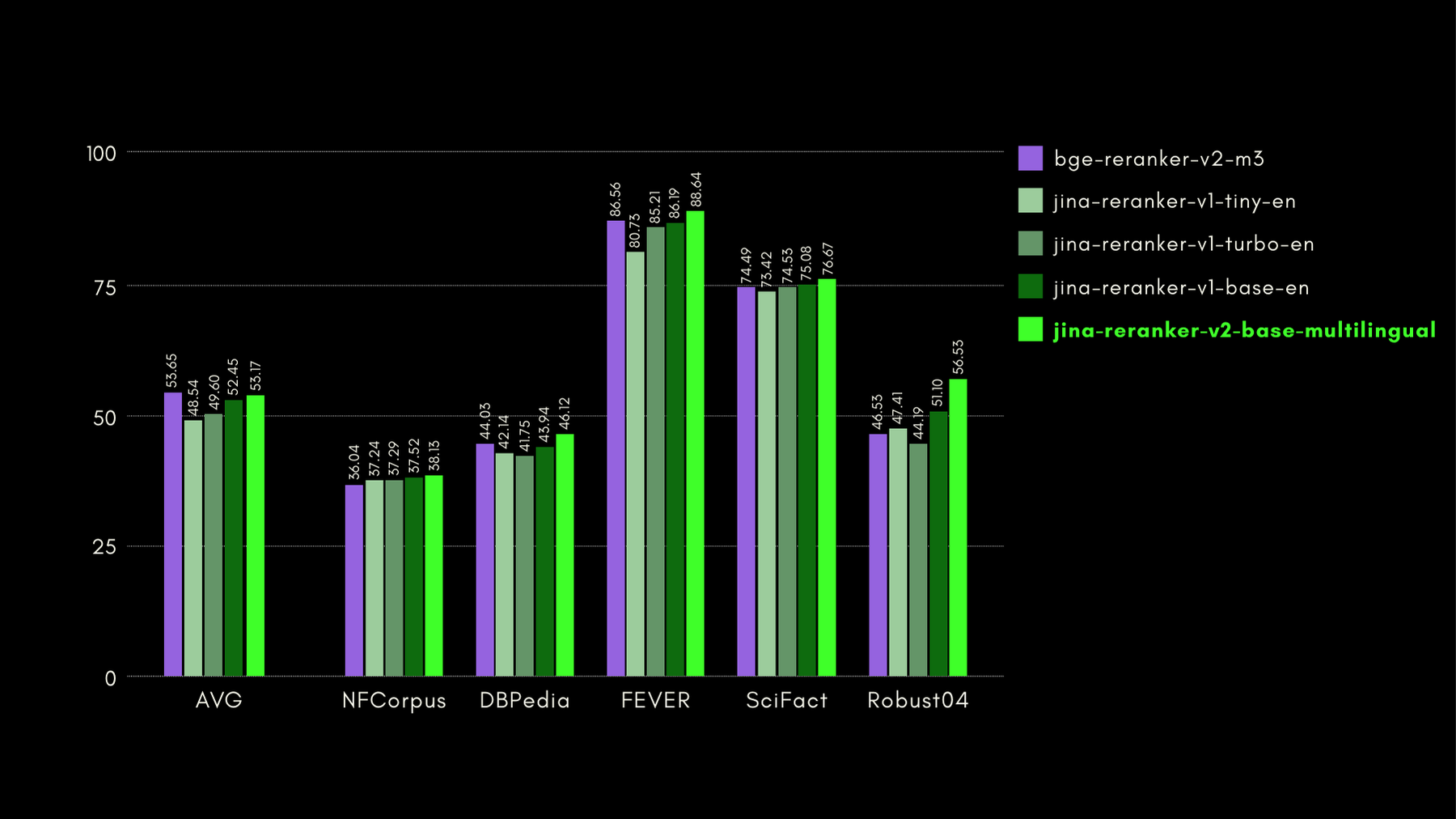
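recall@k is the fraction of a query's relevant items that appear in the top k results, averaged over queries. A minimal sketch of that textbook definition follows; the exact MKQA protocol (for instance, how answer matches are counted) may differ, so treat it as illustrative. The same function with k=3 corresponds to the recall@3 figures reported for text-to-SQL and function calling.

```python
def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the relevant items that appear in the top-k of a ranking."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant)
    return hits / len(relevant)


def mean_recall_at_k(runs, k):
    """Average recall@k over (ranked_ids, relevant_ids) pairs, one per query."""
    return sum(recall_at_k(ranked, relevant, k) for ranked, relevant in runs) / len(runs)


ranking = ["d3", "d1", "d7", "d9"]
print(recall_at_k(ranking, {"d1", "d9"}, k=2))  # 0.5: only d1 is in the top 2
print(recall_at_k(ranking, {"d1", "d9"}, k=4))  # 1.0
```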

BEIR: Heterogeneous Benchmark on Diverse IR Tasks

This open-source repository contains a retrieval benchmark for many languages, but we focus only on the English-language tasks. These comprise 17 datasets without any training data, designed to evaluate the retrieval accuracy of neural and lexical retrievers.

In the graph below, we report NDCG@10 on BEIR for each reranker. The results clearly show that the newly introduced multilingual capabilities of jina-reranker-v2-base-multilingual don't compromise its English-language retrieval capabilities, which are, moreover, significantly improved over jina-reranker-v1-base-en.

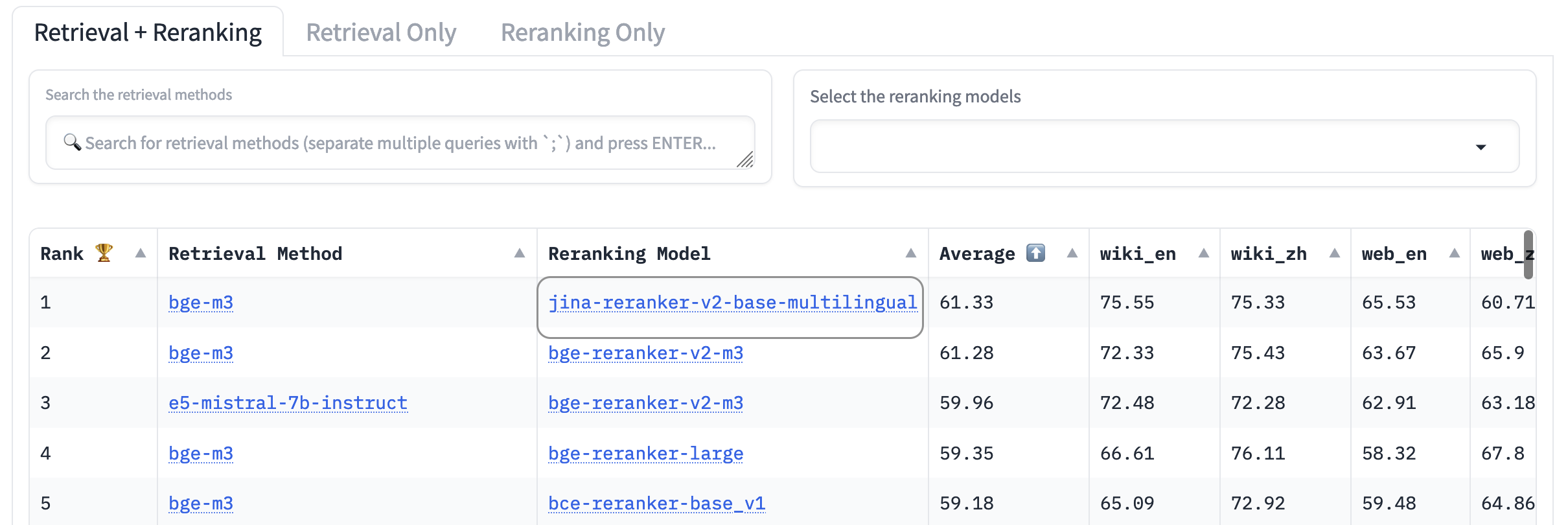
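NDCG@10 rewards placing highly relevant documents near the top: each result's relevance grade is discounted by the log of its rank, and the sum is normalized by the best achievable ordering. A minimal sketch, assuming linear gains (some NDCG variants use 2^rel - 1 instead) and treating unjudged documents as non-relevant:

```python
import math


def dcg_at_k(gains, k):
    """Discounted cumulative gain: the item at 1-based rank r is divided by log2(r + 1)."""
    return sum(gain / math.log2(rank + 1) for rank, gain in enumerate(gains[:k], start=1))


def ndcg_at_k(ranked_ids, qrels, k=10):
    """qrels maps doc id -> graded relevance; unjudged docs count as 0."""
    gains = [qrels.get(doc_id, 0) for doc_id in ranked_ids]
    ideal_dcg = dcg_at_k(sorted(qrels.values(), reverse=True), k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0


qrels = {"a": 1}
print(ndcg_at_k(["a", "b"], qrels))  # 1.0: the relevant doc is ranked first
print(ndcg_at_k(["b", "a"], qrels))  # 1 / log2(3), about 0.63
```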

AirBench: Automated Heterogeneous IR Benchmark

We co-created and published the AirBench benchmark for RAG systems, together with BAAI. This benchmark uses automatically-generated synthetic data for custom domains and tasks, without publicly releasing the ground truth so that the benchmarked models have no chance to overfit the dataset.

At the time of writing, jina-reranker-v2-base-multilingual outperforms every other included reranker model, nabbing first place on the leaderboard.

jina-reranker-v2-base-multilingual amongst reranking models

Recap of Tooling-Agents: Teaching LLMs To Use Tools

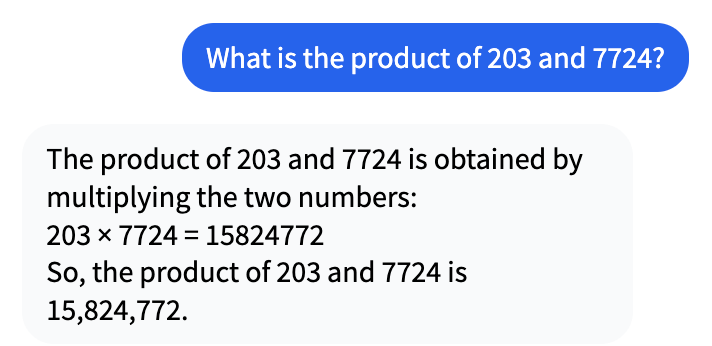

Since the big AI boom started a few years ago, people have seen how AI models under-perform at things computers are supposed to be good at. For example, consider this conversation with Mistral-7b-Instruct-v0.1:

This might look right at first glance, but actually 203 times 7724 is 1,567,972.

So why does the LLM get it wrong by a factor of over ten? Because LLMs aren’t trained to do math or any other kind of reasoning, and the lack of any internal recursion all but guarantees that they can’t solve complex math problems. They’re trained to produce text or perform other tasks that are not inherently precise.

LLMs are happy to hallucinate answers, though. From the model’s perspective, 15,824,772 is a perfectly plausible answer to 203 × 7,724. It’s just totally wrong.
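The exact product is trivial for ordinary code, which is why agentic setups hand this kind of work to tools instead of the model. Here is a minimal sketch of that idea: the model only has to emit a structured call, and the runtime executes it. The tool registry and the JSON call format are hypothetical, chosen for illustration; real LLM function-calling formats vary.

```python
import json

# Hypothetical tool registry: plain Python functions the runtime can execute.
TOOLS = {
    "multiply": lambda a, b: a * b,
    "add": lambda a, b: a + b,
}


def run_tool_call(model_output: str):
    """Execute a tool call emitted by an LLM as JSON, e.g.
    {"name": "multiply", "arguments": {"a": 203, "b": 7724}}."""
    call = json.loads(model_output)
    fn = TOOLS[call["name"]]
    return fn(**call["arguments"])


# Instead of guessing the product, the model only has to emit this call.
llm_output = '{"name": "multiply", "arguments": {"a": 203, "b": 7724}}'
exact = run_tool_call(llm_output)
hallucinated = 15_824_772
print(exact)                           # 1567972
print(round(hallucinated / exact, 2))  # 10.09
```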

Agentic RAG shifts generative LLMs away from what they’re bad at (thinking and knowing things) toward what they’re good at: reading comprehension and synthesizing information into natural language. Instead of just generating an answer, RAG finds information relevant to your request in whatever data sources are open to it and presents that information to the language model. The model's job isn't to make up an answer for you, but to present answers found by a different system in a natural and responsive form.

We've trained Jina Reranker v2 to be sensitive to SQL database schemas and function-calling. This requires a different kind of semantics from conventional text retrieval: the reranker must be task- and code-aware.

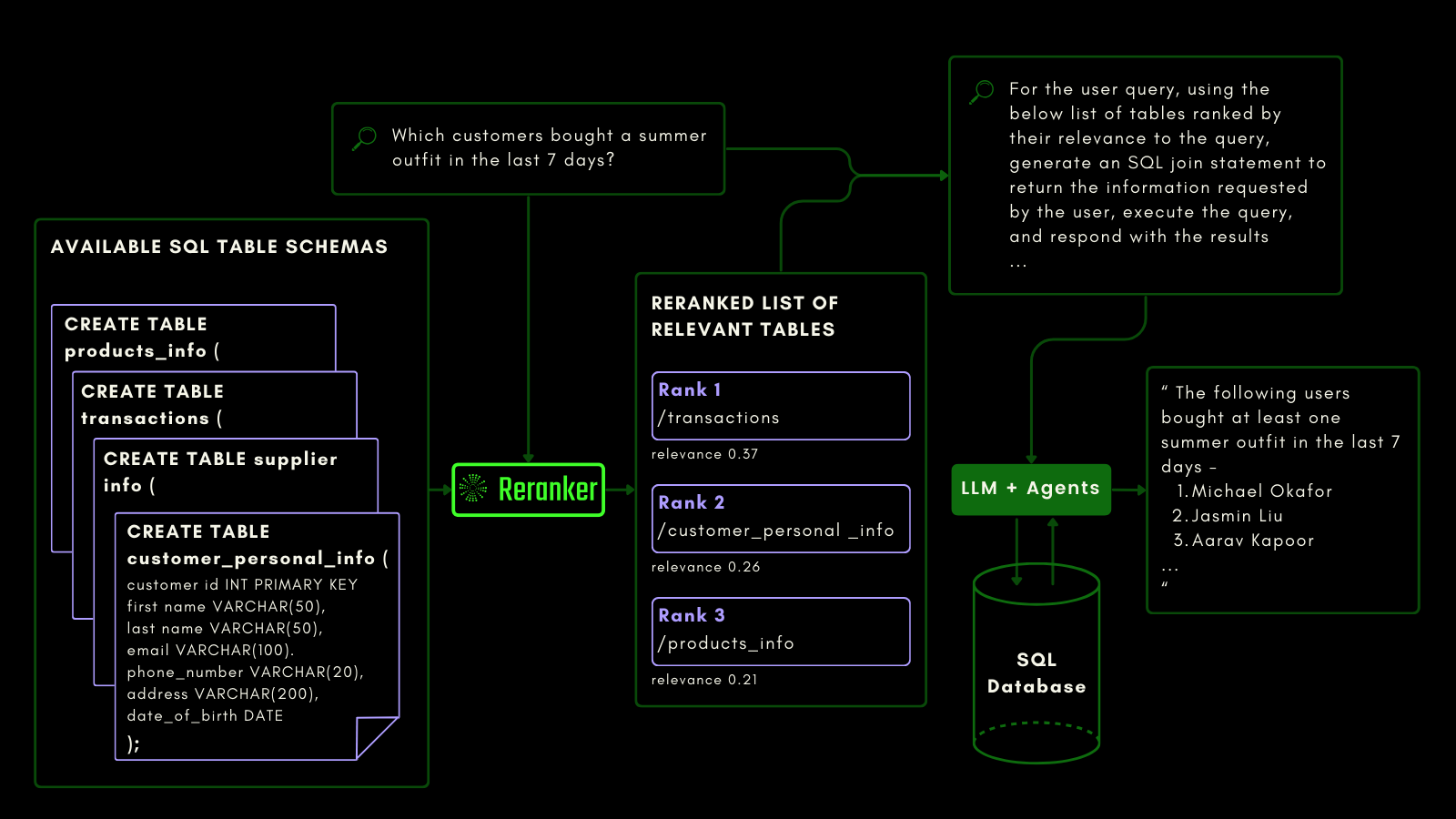

Jina Reranker v2 on Structured Data Querying

While embedding and reranker models already treat unstructured data as first-class citizens, support for structured tabular data is still lacking in most models.

Jina Reranker v2 understands the downstream intent to query structured databases, such as MySQL or MongoDB, and assigns the correct relevance score to a structured table schema given an input query.

You can see that below, where the reranker retrieves the most relevant tables before an LLM is prompted to generate an SQL query from a natural language query:

We evaluated the querying-aware capabilities using the NSText2SQL dataset benchmark. From the “instruction” column of the original dataset, we extract the natural-language instructions and their corresponding table schemas.

The graph below compares, using recall@3, how successful reranker models are in ranking the correct table schema corresponding to a natural language query.

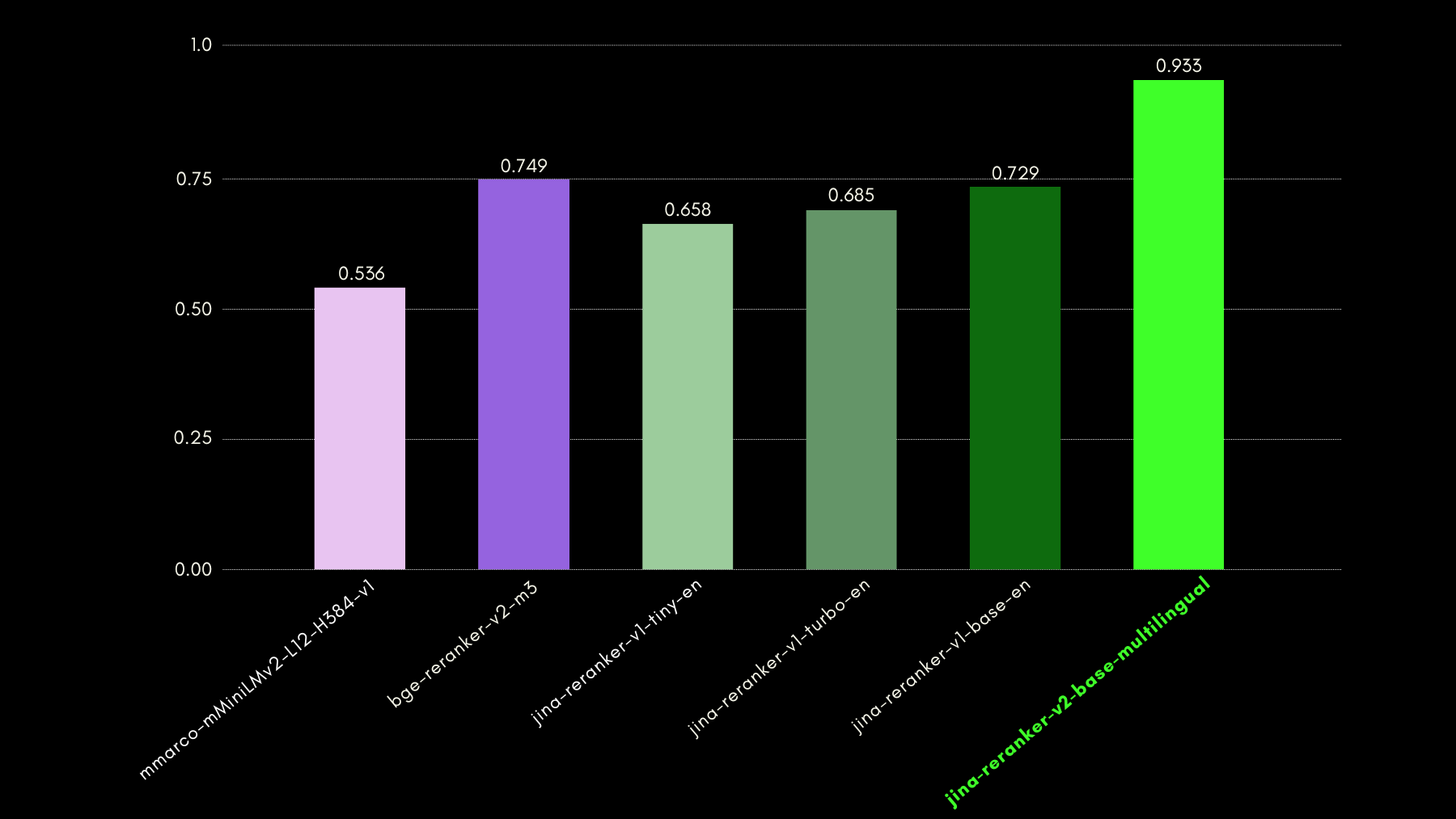
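The retrieve-then-generate flow described above (rerank the table schemas, then prompt an LLM with only the best ones) can be sketched as follows. The input is assumed to have the same shape as the `results` list returned by the Reranker API in the examples later in this article; the scores here are illustrative, and the prompt wording and `top_k` default are our own assumptions.

```python
def build_text_to_sql_prompt(question, rerank_results, top_k=2):
    """rerank_results: entries shaped like the Reranker API's "results" list,
    i.e. {"index": int, "document": {"text": str}, "relevance_score": float}."""
    ranked = sorted(rerank_results, key=lambda r: r["relevance_score"], reverse=True)
    schemas = "\n".join(r["document"]["text"] for r in ranked[:top_k])
    # Hypothetical prompt wording; adapt it to your LLM.
    return (
        "You are given these table schemas:\n"
        f"{schemas}\n\n"
        f"Write one SQL query that answers: {question}"
    )


results = [
    {"index": 0, "document": {"text": "CREATE TABLE customers (customer_id INT);"}, "relevance_score": 0.06},
    {"index": 1, "document": {"text": "CREATE TABLE suppliers (supplier_id INT);"}, "relevance_score": 0.03},
    {"index": 2, "document": {"text": "CREATE TABLE transactions (customer_id INT, purchase_date DATE);"}, "relevance_score": 0.28},
]
print(build_text_to_sql_prompt("Which customers bought something in the past 7 days?", results))
```

Keeping only the top-scoring schemas keeps the LLM prompt short, which matters when a database has many more tables than fit comfortably in context.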

Jina Reranker v2 on Function Calling

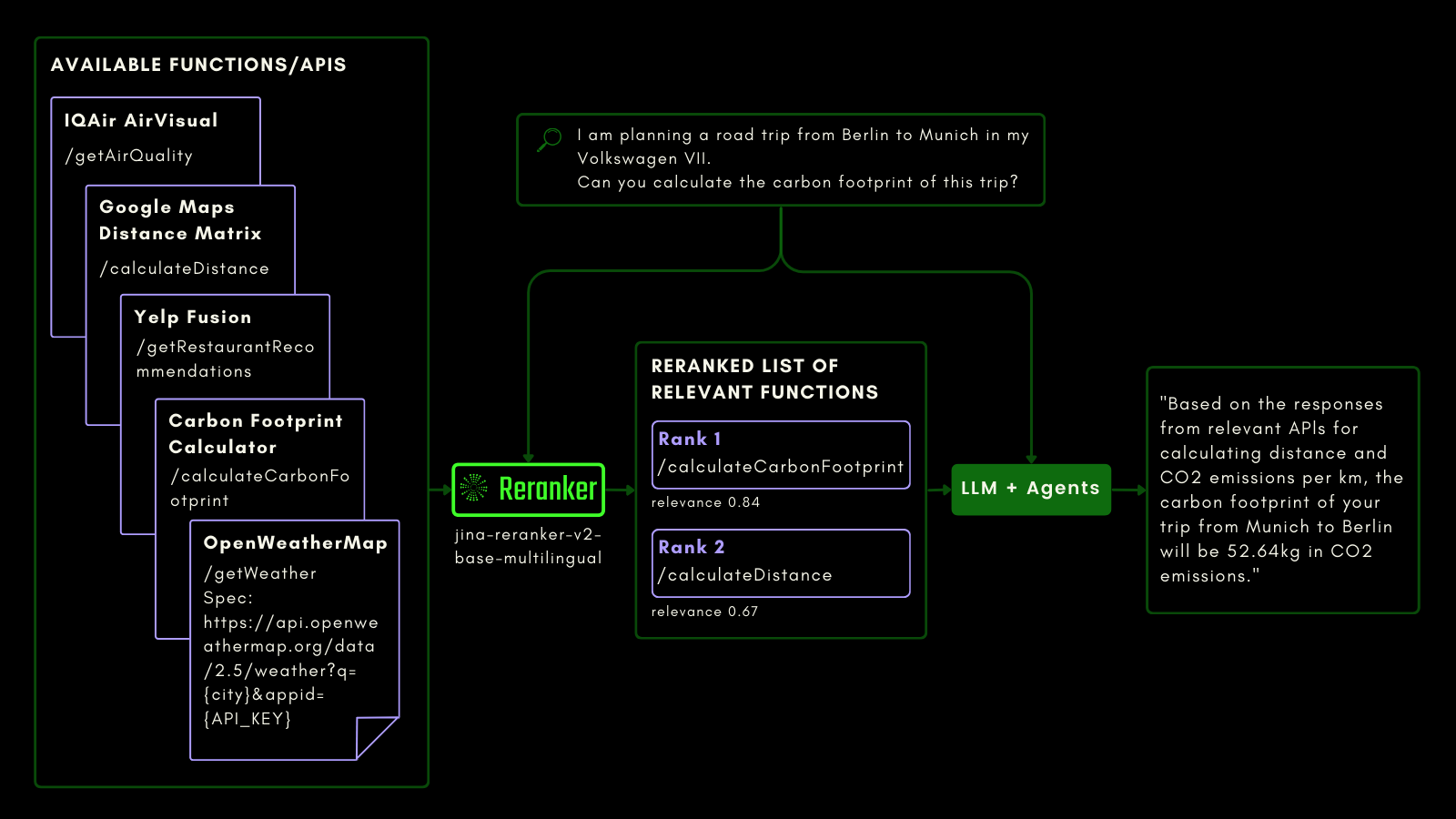

Just like querying an SQL table, you can use agentic RAG to invoke external tools. With that in mind, we integrated function calling into Jina Reranker v2, letting it understand your intent for external functions and assign relevance scores to function specifications accordingly.

The schematic below explains, with an example, how LLMs can use the reranker to improve function-calling capabilities and, ultimately, the agentic AI user experience.

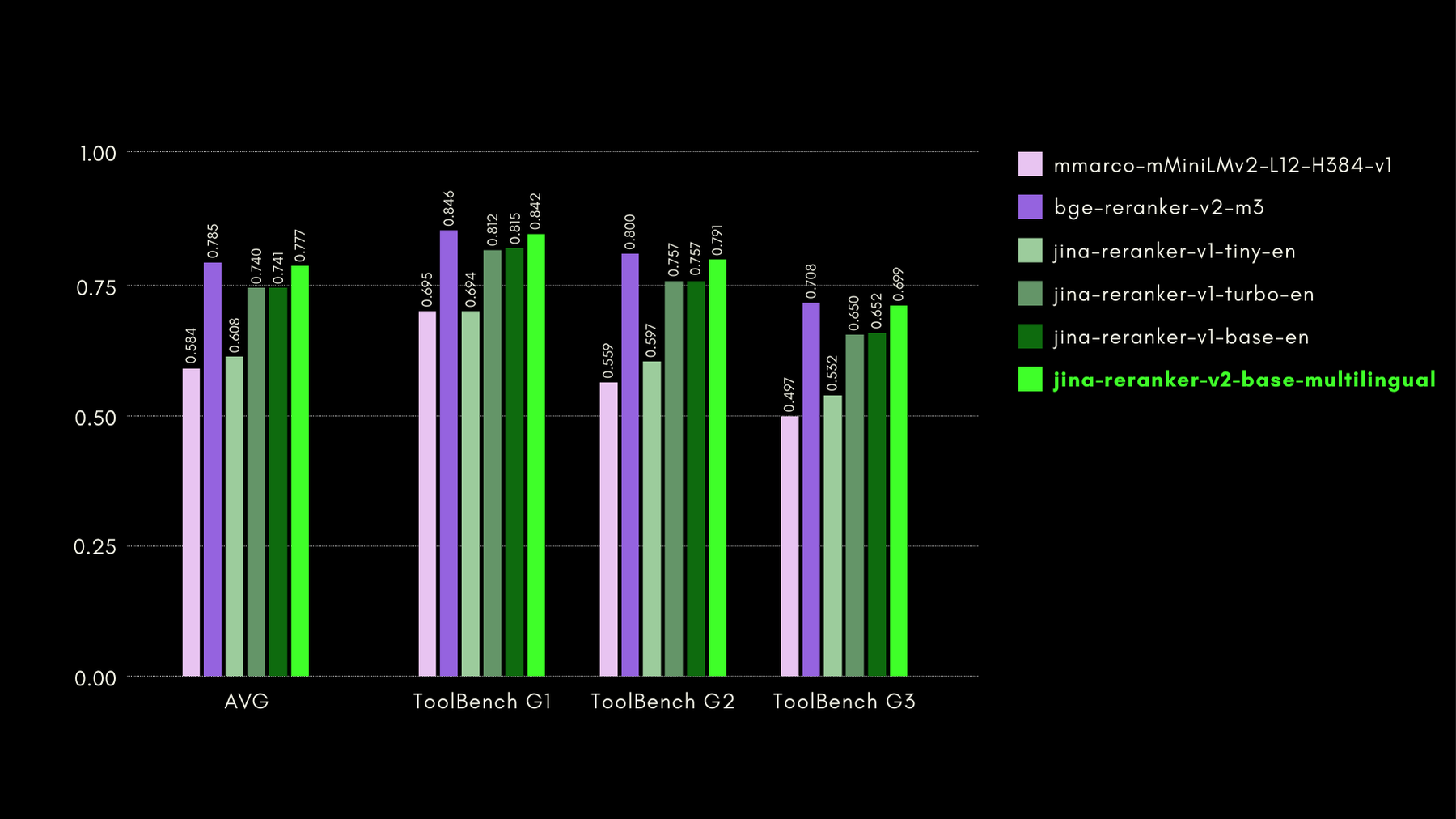
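On the agent side, a simple way to consume the reranker's output is to pick the best-scoring function specification and skip tool use entirely when nothing scores well enough. A minimal sketch, with scores copied from the function-calling API example later in this article; the `min_score` cut-off is a hypothetical value you would need to tune, since relevance scores are best read as relative rankings.

```python
def select_tool(rerank_results, min_score=0.3):
    """Return the document index of the best-scoring function spec, or None if
    nothing clears min_score (a hypothetical cut-off you would tune)."""
    if not rerank_results:
        return None
    best = max(rerank_results, key=lambda r: r["relevance_score"])
    return best["index"] if best["relevance_score"] >= min_score else None


# Scores taken from the function-calling API example later in this article.
results = [
    {"index": 2, "relevance_score": 0.5422876477241516},   # calculateCarbonFootprint
    {"index": 1, "relevance_score": 0.23283305764198303},  # calculateDistance
    {"index": 0, "relevance_score": 0.05033063143491745},  # getWeather
]
print(select_tool(results))                 # 2
print(select_tool(results, min_score=0.6))  # None: answer without calling a tool
```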

We evaluated function-aware capabilities with the ToolBench benchmark. The benchmark collects over 16 thousand public APIs and corresponding synthetically-generated instructions for using them in single and multi-API settings.

Here are the results (recall@3 metric) compared to other reranker models:

As we show in a later section, this near state-of-the-art performance of jina-reranker-v2-base-multilingual comes with the benefit of being half the size of bge-reranker-v2-m3 and almost 15 times faster.

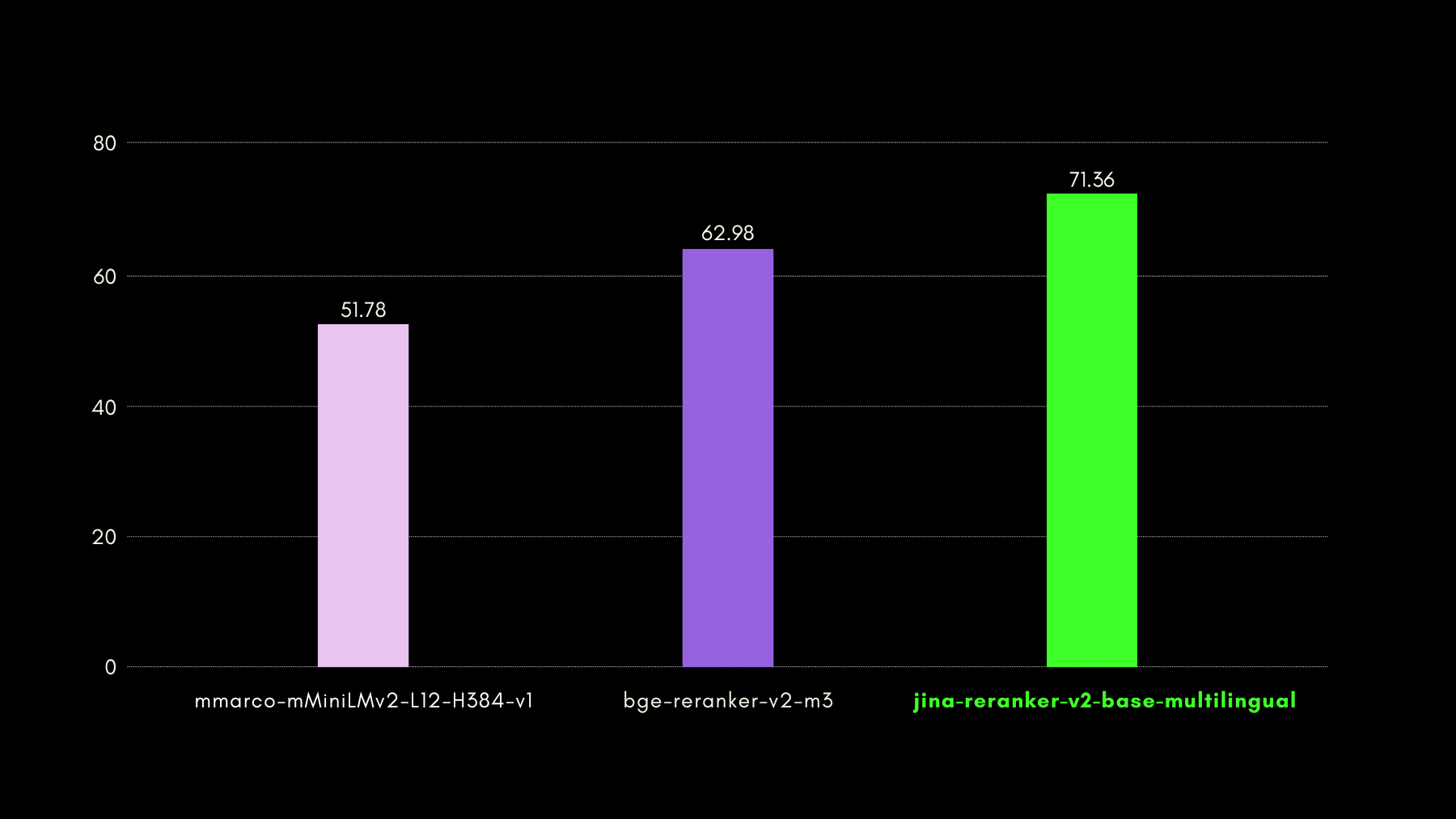

Jina Reranker v2 on Code Retrieval

Besides being trained for function calling and structured data querying, Jina Reranker v2 also improves code retrieval compared to competing models of similar size. We evaluated its code retrieval capabilities using the CodeSearchNet benchmark, which combines queries in docstring and natural-language formats with labelled code segments relevant to each query.

Here are the results, using MRR@10, compared to other reranker models:

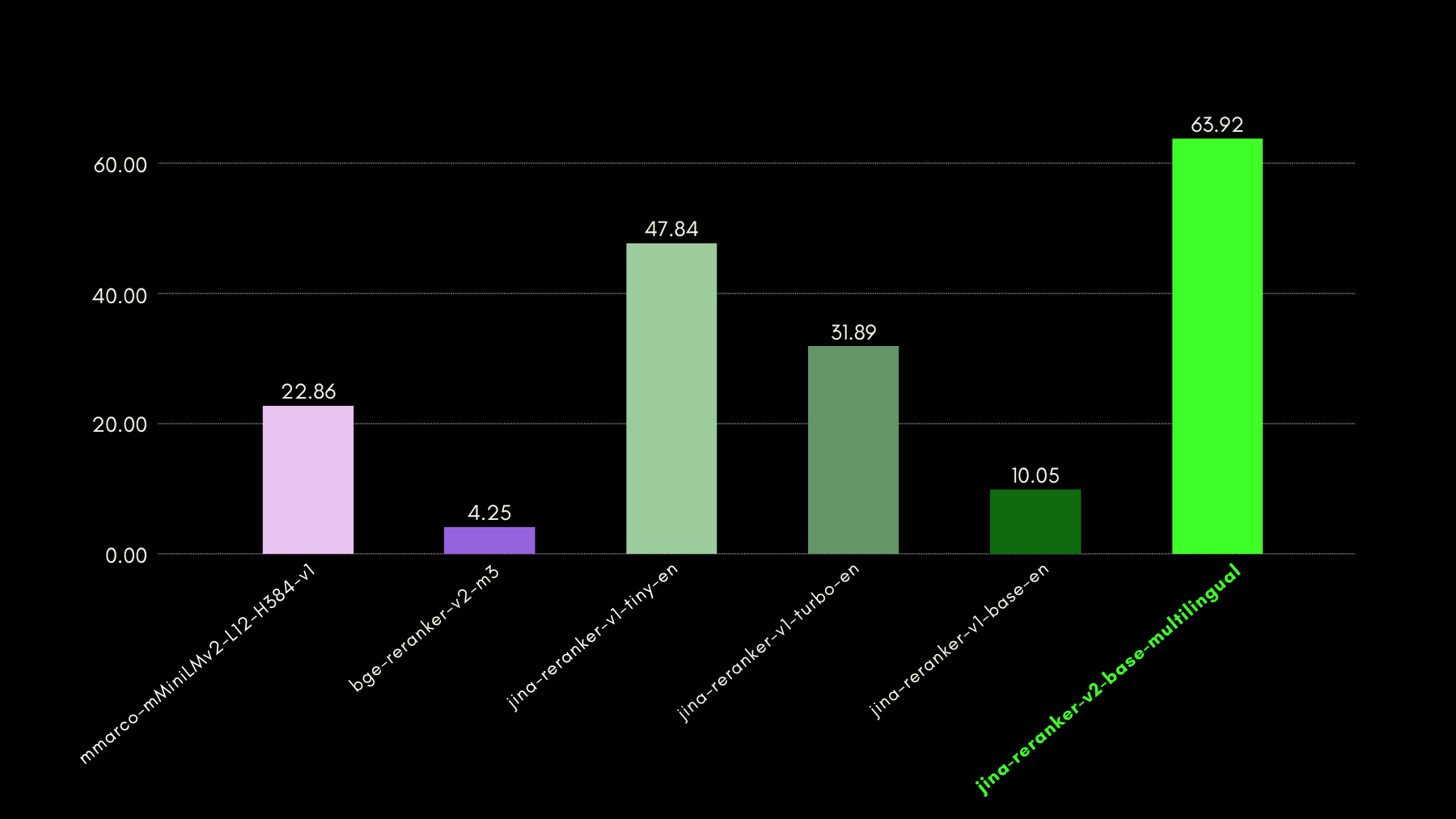
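MRR@10 looks only at the first relevant result: each query contributes 1/rank of that result if it appears in the top 10, and 0 otherwise. A minimal sketch of the standard definition (the benchmark's own evaluation scripts may differ in details such as tie handling):

```python
def reciprocal_rank_at_k(ranked_ids, relevant_ids, k=10):
    """1 / (rank of the first relevant item) if it is within the top k, else 0."""
    for rank, doc_id in enumerate(ranked_ids[:k], start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0


def mrr_at_k(runs, k=10):
    """Mean reciprocal rank over (ranked_ids, relevant_ids) pairs, one per query."""
    return sum(reciprocal_rank_at_k(ranked, set(rel), k) for ranked, rel in runs) / len(runs)


runs = [
    (["c1", "c2", "c3"], ["c2"]),  # first relevant at rank 2 -> 0.5
    (["c4"], ["c4"]),              # rank 1 -> 1.0
    (["c5", "c6"], ["c0"]),        # not retrieved -> 0.0
]
print(mrr_at_k(runs))  # 0.5
```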

Ultra Fast Inference with Jina Reranker v2

While cross-encoder-style neural rerankers excel at predicting a retrieved document's relevance, their inference is slower than that of embedding models: scoring a query against n documents one pair at a time is far slower than HNSW or the other fast retrieval methods used in most vector databases. We addressed this slowness in Jina Reranker v2 in two ways:

- Our unique training insights (described in the following section) let the model reach state-of-the-art accuracy with only 278M parameters. Compared to, say, bge-reranker-v2-m3, with 567M parameters, Jina Reranker v2 is only half the size. This reduction is the first reason for its improved throughput (documents processed per 50ms).

- Even at a comparable model size, Jina Reranker v2 boasts 6x the throughput of our previous state-of-the-art English model, Jina Reranker v1. This is because we implemented Jina Reranker v2 with Flash Attention 2, which introduces memory and computational optimizations in the attention layer of transformer-based models.

You can see the outcome of the above steps, in terms of the throughput performance of Jina Reranker v2:

How We Trained Jina Reranker v2

We trained jina-reranker-v2-base-multilingual in four stages:

- Preparation with English Data: We prepared the first version of the model by training a backbone model with only English-language data, including pairs (contrastive training) or triplets (query, correct response, wrong response), query-function schema pairs and query-table schema pairs.

- Addition of Cross-lingual Data: In the next stage, we added cross-lingual pair and triplet datasets to improve the backbone model's multilingual abilities, specifically on retrieval tasks.

- Addition of all Multilingual Data: At this stage, we focused training mostly on ensuring the model sees the largest possible amount of our data. We fine-tuned the model checkpoint from the second stage with all pair and triplet datasets, covering over 100 low- and high-resource languages.

- Fine-Tuning with Mined Hard Negatives: After observing the reranking performance from the third stage, we fine-tuned the model with additional triplet data containing more hard negatives for existing queries: responses that look superficially relevant to the query but are in fact wrong.

This four-stage training approach was based on the insight that including functions and tabular schemas in the training process as early as possible allowed the model to be particularly aware of these use cases and learn to focus on the semantics of the candidate documents more than the language constructs.
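The fourth stage relies on hard negatives. The article does not say how they were mined or which loss was used, so the sketch below is an assumption, not Jina's recipe: it mines negatives by ranking non-positive candidates with a toy first-stage scorer (the top-scoring ones "look relevant", which is what makes them hard) and shows a pairwise hinge loss of the kind commonly used with (query, positive, negative) triplets.

```python
def overlap_score(query, text):
    """Toy first-stage scorer (an assumption): share of query words found in text."""
    q = set(query.lower().split())
    return len(q & set(text.lower().split())) / len(q)


def mine_hard_negatives(query, positive, candidates, score_fn, n_neg=2):
    """Build (query, positive, negative) triplets from the highest-scoring
    non-positive candidates: they look relevant, which is what makes them hard."""
    negatives = [c for c in candidates if c != positive]
    negatives.sort(key=lambda c: score_fn(query, c), reverse=True)  # stable for ties
    return [(query, positive, neg) for neg in negatives[:n_neg]]


def margin_ranking_loss(pos_score, neg_score, margin=1.0):
    """Pairwise hinge loss: zero once the positive outscores the negative by `margin`."""
    return max(0.0, margin - (pos_score - neg_score))


query = "reset password via email"
positive = "how to reset your password via email"
candidates = [
    positive,
    "password strength requirements",
    "reset your router to factory settings",
    "company holiday schedule",
]
for triplet in mine_hard_negatives(query, positive, candidates, overlap_score):
    print(triplet[2])
# password strength requirements
# reset your router to factory settings
```

The unrelated "company holiday schedule" is never selected: it is an easy negative the model would already rank low, so it teaches little.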

Jina Reranker v2 in Practice

Via Our Reranker API

The fastest and easiest way to get started with Jina Reranker v2 is to use Jina Reranker's API.

Head to the API section of this page to integrate jina-reranker-v2-base-multilingual using the programming language of your choice.
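If you'd rather not pull in an SDK, here is a minimal Python sketch that uses only the standard library. The endpoint, headers, and JSON fields (`model`, `query`, `documents`, and the `results` entries with `index` and `relevance_score`) are copied from the curl examples in this section; the helper names are our own, and no other request options are assumed.

```python
import json
import urllib.request

JINA_RERANK_URL = "https://api.jina.ai/v1/rerank"


def build_rerank_request(api_key, query, documents, model="jina-reranker-v2-base-multilingual"):
    """Build the same POST request as the curl examples (not sent yet)."""
    payload = {"model": model, "query": query, "documents": documents}
    return urllib.request.Request(
        JINA_RERANK_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ranked_pairs(body):
    """Turn a response body into (document index, relevance_score) pairs, best first."""
    results = sorted(body["results"], key=lambda r: r["relevance_score"], reverse=True)
    return [(r["index"], r["relevance_score"]) for r in results]


def rerank(api_key, query, documents):
    """Send the request and return ranked (index, score) pairs."""
    with urllib.request.urlopen(build_rerank_request(api_key, query, documents)) as resp:
        return ranked_pairs(json.load(resp))


# Example (makes a network call):
# print(rerank("<YOUR JINA AI TOKEN HERE>", "City in France", ["Paris", "Berlin"]))
```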

Example 1: Ranking Function Calls

To rank the most relevant external function/tool, format the query and documents (function schemas) as shown below:

```bash
curl -X 'POST' \
  'https://api.jina.ai/v1/rerank' \
  -H 'accept: application/json' \
  -H 'Authorization: Bearer <YOUR JINA AI TOKEN HERE>' \
  -H 'Content-Type: application/json' \
  -d '{
  "model": "jina-reranker-v2-base-multilingual",
  "query": "I am planning a road trip from Berlin to Munich in my Volkswagen VII. Can you calculate the carbon footprint of this trip?",
  "documents": [
    "{'\''Name'\'': '\''getWeather'\'', '\''Specification'\'': '\''Provides current weather information for a specified city'\'', '\''spec'\'': '\''https://api.openweathermap.org/data/2.5/weather?q={city}&appid={API_KEY}'\'', '\''example'\'': '\''https://api.openweathermap.org/data/2.5/weather?q=Berlin&appid=YOUR_API_KEY'\''}",
    "{'\''Name'\'': '\''calculateDistance'\'', '\''Specification'\'': '\''Calculates the driving distance and time between multiple locations'\'', '\''spec'\'': '\''https://maps.googleapis.com/maps/api/distancematrix/json?origins={startCity}&destinations={endCity}&key={API_KEY}'\'', '\''example'\'': '\''https://maps.googleapis.com/maps/api/distancematrix/json?origins=Berlin&destinations=Munich&key=YOUR_API_KEY'\''}",
    "{'\''Name'\'': '\''calculateCarbonFootprint'\'', '\''Specification'\'': '\''Estimates the carbon footprint for various activities, including transportation'\'', '\''spec'\'': '\''https://www.carboninterface.com/api/v1/estimates'\'', '\''example'\'': '\''{type: vehicle, distance: distance, vehicle_model_id: car}'\''}"
  ]
}'
```

Remember to substitute <YOUR JINA AI TOKEN HERE> with your personal Reranker API token.

You should get:

```json
{
  "model": "jina-reranker-v2-base-multilingual",
  "usage": {
    "total_tokens": 383,
    "prompt_tokens": 383
  },
  "results": [
    {
      "index": 2,
      "document": {
        "text": "{'Name': 'calculateCarbonFootprint', 'Specification': 'Estimates the carbon footprint for various activities, including transportation', 'spec': 'https://www.carboninterface.com/api/v1/estimates', 'example': '{type: vehicle, distance: distance, vehicle_model_id: car}'}"
      },
      "relevance_score": 0.5422876477241516
    },
    {
      "index": 1,
      "document": {
        "text": "{'Name': 'calculateDistance', 'Specification': 'Calculates the driving distance and time between multiple locations', 'spec': 'https://maps.googleapis.com/maps/api/distancematrix/json?origins={startCity}&destinations={endCity}&key={API_KEY}', 'example': 'https://maps.googleapis.com/maps/api/distancematrix/json?origins=Berlin&destinations=Munich&key=YOUR_API_KEY'}"
      },
      "relevance_score": 0.23283305764198303
    },
    {
      "index": 0,
      "document": {
        "text": "{'Name': 'getWeather', 'Specification': 'Provides current weather information for a specified city', 'spec': 'https://api.openweathermap.org/data/2.5/weather?q={city}&appid={API_KEY}', 'example': 'https://api.openweathermap.org/data/2.5/weather?q=Berlin&appid=YOUR_API_KEY'}"
      },
      "relevance_score": 0.05033063143491745
    }
  ]
}
```

Example 2: Ranking SQL Queries

Likewise, to retrieve relevance scores for structured table schemas for your query, you can use the following example API call:

```bash
curl -X 'POST' \
  'https://api.jina.ai/v1/rerank' \
  -H 'accept: application/json' \
  -H 'Authorization: Bearer <YOUR JINA AI TOKEN HERE>' \
  -H 'Content-Type: application/json' \
  -d '{
  "model": "jina-reranker-v2-base-multilingual",
  "query": "which customers bought a summer outfit in the past 7 days?",
  "documents": [
    "CREATE TABLE customer_personal_info (customer_id INT PRIMARY KEY, first_name VARCHAR(50), last_name VARCHAR(50));",
    "CREATE TABLE supplier_company_info (supplier_id INT PRIMARY KEY, company_name VARCHAR(100), contact_name VARCHAR(50));",
    "CREATE TABLE transactions (transaction_id INT PRIMARY KEY, customer_id INT, purchase_date DATE, FOREIGN KEY (customer_id) REFERENCES customer_personal_info(customer_id), product_id INT, FOREIGN KEY (product_id) REFERENCES products(product_id));",
    "CREATE TABLE products (product_id INT PRIMARY KEY, product_name VARCHAR(100), season VARCHAR(50), supplier_id INT, FOREIGN KEY (supplier_id) REFERENCES supplier_company_info(supplier_id));"
  ]
}'
```

The expected response is:

```json
{
  "model": "jina-reranker-v2-base-multilingual",
  "usage": {
    "total_tokens": 253,
    "prompt_tokens": 253
  },
  "results": [
    {
      "index": 2,
      "document": {
        "text": "CREATE TABLE transactions (transaction_id INT PRIMARY KEY, customer_id INT, purchase_date DATE, FOREIGN KEY (customer_id) REFERENCES customer_personal_info(customer_id), product_id INT, FOREIGN KEY (product_id) REFERENCES products(product_id));"
      },
      "relevance_score": 0.2789437472820282
    },
    {
      "index": 0,
      "document": {
        "text": "CREATE TABLE customer_personal_info (customer_id INT PRIMARY KEY, first_name VARCHAR(50), last_name VARCHAR(50));"
      },
      "relevance_score": 0.06477169692516327
    },
    {
      "index": 3,
      "document": {
        "text": "CREATE TABLE products (product_id INT PRIMARY KEY, product_name VARCHAR(100), season VARCHAR(50), supplier_id INT, FOREIGN KEY (supplier_id) REFERENCES supplier_company_info(supplier_id));"
      },
      "relevance_score": 0.027742892503738403
    },
    {
      "index": 1,
      "document": {
        "text": "CREATE TABLE supplier_company_info (supplier_id INT PRIMARY KEY, company_name VARCHAR(100), contact_name VARCHAR(50));"
      },
      "relevance_score": 0.025516605004668236
    }
  ]
}
```

Via RAG/LLM Frameworks

Jina Reranker’s existing integrations with LLM and RAG orchestration frameworks should already work out-of-the-box by using the model name jina-reranker-v2-base-multilingual. Refer to their respective documentation pages to learn more about how to integrate Jina Reranker v2 in your applications.

- Haystack by deepset: Jina Reranker v2 can be used with the JinaRanker class in Haystack:

```python
from haystack import Document
from haystack.utils import Secret
from haystack_integrations.components.rankers.jina import JinaRanker

docs = [Document(content="Paris"), Document(content="Berlin")]

# api_key expects a Haystack Secret rather than a plain string
ranker = JinaRanker(model="jina-reranker-v2-base-multilingual", api_key=Secret.from_token("<YOUR JINA AI API KEY HERE>"))

result = ranker.run(query="City in France", documents=docs, top_k=1)
print(result["documents"])
```

- LlamaIndex: Jina Reranker v2 can be used as a JinaRerank node postprocessor module by initializing it:

```python
from llama_index.postprocessor.jinaai_rerank import JinaRerank

jina_rerank = JinaRerank(model="jina-reranker-v2-base-multilingual", api_key="<YOUR JINA AI API KEY HERE>", top_n=1)

# Attach it to a query engine as a node postprocessor, e.g.:
# query_engine = index.as_query_engine(node_postprocessors=[jina_rerank])
```

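- LangChain: Use the JinaRerank integration to add Jina Reranker v2 to your existing application. Initialize the JinaRerank module with the right model name:

```python
from langchain_community.document_compressors import JinaRerank
from langchain_core.documents import Document

reranker = JinaRerank(model="jina-reranker-v2-base-multilingual", jina_api_key="<YOUR JINA AI API KEY HERE>")

docs = [Document(page_content="Paris"), Document(page_content="Berlin")]
reranked = reranker.compress_documents(docs, query="City in France")
```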

Via HuggingFace

We are also opening access (under CC-BY-NC-4.0) to jina-reranker-v2-base-multilingual model on Hugging Face for research and evaluation purposes.

To download and run the model from Hugging Face, install the transformers and einops libraries, plus ninja and flash-attn for Flash Attention support:

```bash
pip install transformers einops
pip install ninja
pip install flash-attn --no-build-isolation
```

Log in to Hugging Face with the CLI, using your Hugging Face access token:

```bash
huggingface-cli login --token <YOUR_HF_ACCESS_TOKEN>
```

Download the pre-trained model:

```python
from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained(
    'jinaai/jina-reranker-v2-base-multilingual',
    torch_dtype="auto",
    trust_remote_code=True,
)

model.to('cuda')  # or 'cpu' if no GPU is available
model.eval()
```

Define the query and the documents to be reranked:

```python
query = "Organic skincare products for sensitive skin"

documents = [
    "Organic skincare for sensitive skin with aloe vera and chamomile.",
    "New makeup trends focus on bold colors and innovative techniques",
    "Bio-Hautpflege für empfindliche Haut mit Aloe Vera und Kamille",
    "Neue Make-up-Trends setzen auf kräftige Farben und innovative Techniken",
    "Cuidado de la piel orgánico para piel sensible con aloe vera y manzanilla",
    "Las nuevas tendencias de maquillaje se centran en colores vivos y técnicas innovadoras",
    "针对敏感肌专门设计的天然有机护肤产品",
    "新的化妆趋势注重鲜艳的颜色和创新的技巧",
    "敏感肌のために特別に設計された天然有機スキンケア製品",
    "新しいメイクのトレンドは鮮やかな色と革新的な技術に焦点を当てています",
]
```

Construct sentence pairs and compute the relevance scores:

```python
sentence_pairs = [[query, doc] for doc in documents]

scores = model.compute_score(sentence_pairs, max_length=1024)
```

The scores will be a list of floats, where each float represents the relevance score of the corresponding document to the query. Higher scores mean higher relevance.

Alternatively, use the rerank function to rerank large texts by automatically chunking the query and the documents based on max_query_length and max_length respectively. Each chunk is scored individually and scores of each chunk are then combined to produce the final reranking results:

```python
results = model.rerank(
    query,
    documents,
    max_query_length=512,
    max_length=1024,
    top_n=3,
)
```

This function returns, for each document, not only its relevance score but also its content and position in the original document list.
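To see why chunking helps with long texts, here is a simplified sketch of the idea. The article says chunk scores are combined but not how, so this sketch assumes max-pooling (a document scores as well as its best chunk); it also chunks only the documents, not the query, and uses a toy word-overlap scorer in place of the model. It is not the model's actual `rerank` implementation.

```python
def overlap_score(query, text):
    """Toy relevance scorer (an assumption): share of query words present in text."""
    q = set(query.lower().split())
    return len(q & set(text.lower().split())) / len(q)


def chunked_rerank(query, documents, score_fn, max_length=8, top_n=3):
    """Split each document into windows of at most max_length whitespace tokens,
    score every (query, window) pair, and keep each document's best window score."""
    results = []
    for index, doc in enumerate(documents):
        tokens = doc.split()
        windows = [" ".join(tokens[i:i + max_length]) for i in range(0, len(tokens), max_length)] or [""]
        best = max(score_fn(query, window) for window in windows)
        results.append({"index": index, "document": doc, "relevance_score": best})
    results.sort(key=lambda r: r["relevance_score"], reverse=True)
    return results[:top_n]


long_doc = " ".join(["filler"] * 10 + ["aloe", "vera", "skincare"])  # relevant text past the first window
docs = ["makeup trends with bold colors", long_doc]
for r in chunked_rerank("aloe vera skincare", docs, overlap_score, max_length=8, top_n=2):
    print(r["index"], r["relevance_score"])
# 1 1.0
# 0 0.0
```

Simply truncating `long_doc` to its first 8 tokens would score it 0.0; scoring every window lets the relevant passage at the end count.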

Via Private Cloud Deployment

Pre-built packages for private deployment of Jina Reranker v2 on AWS and Azure accounts will soon be available on our seller pages on AWS Marketplace and Azure Marketplace, respectively.

Key Takeaways of Jina Reranker v2

Jina Reranker v2 represents an important expansion of capabilities for search foundation:

- State-of-the-art retrieval using cross-encoding opens up a wide array of new application areas.

- Enhanced multilingual and cross-language functionality removes language barriers from your use cases.

- Best-in-class support for function calling, together with awareness of structured data querying, takes your agentic RAG capabilities to the next level of precision.

- Better retrieval of computer code and computer-formatted data takes its usefulness far beyond text information retrieval.

- Much faster document throughput ensures that, irrespective of the retrieval method, you can now rerank many more retrieved documents faster, and offload most of the fine-grained relevance calculation to jina-reranker-v2-base-multilingual.

RAG systems are much more precise with Reranker v2, helping your existing information management solutions produce more and better actionable results. Cross-language support makes all this directly available to multi-national and multilingual enterprises, with an easy-to-use API at an affordable price.

By testing it on benchmarks derived from real use cases, you can see for yourself how Jina Reranker v2 maintains state-of-the-art performance on tasks relevant to real business models, all in one AI model, keeping your costs down and your tech stack simpler.