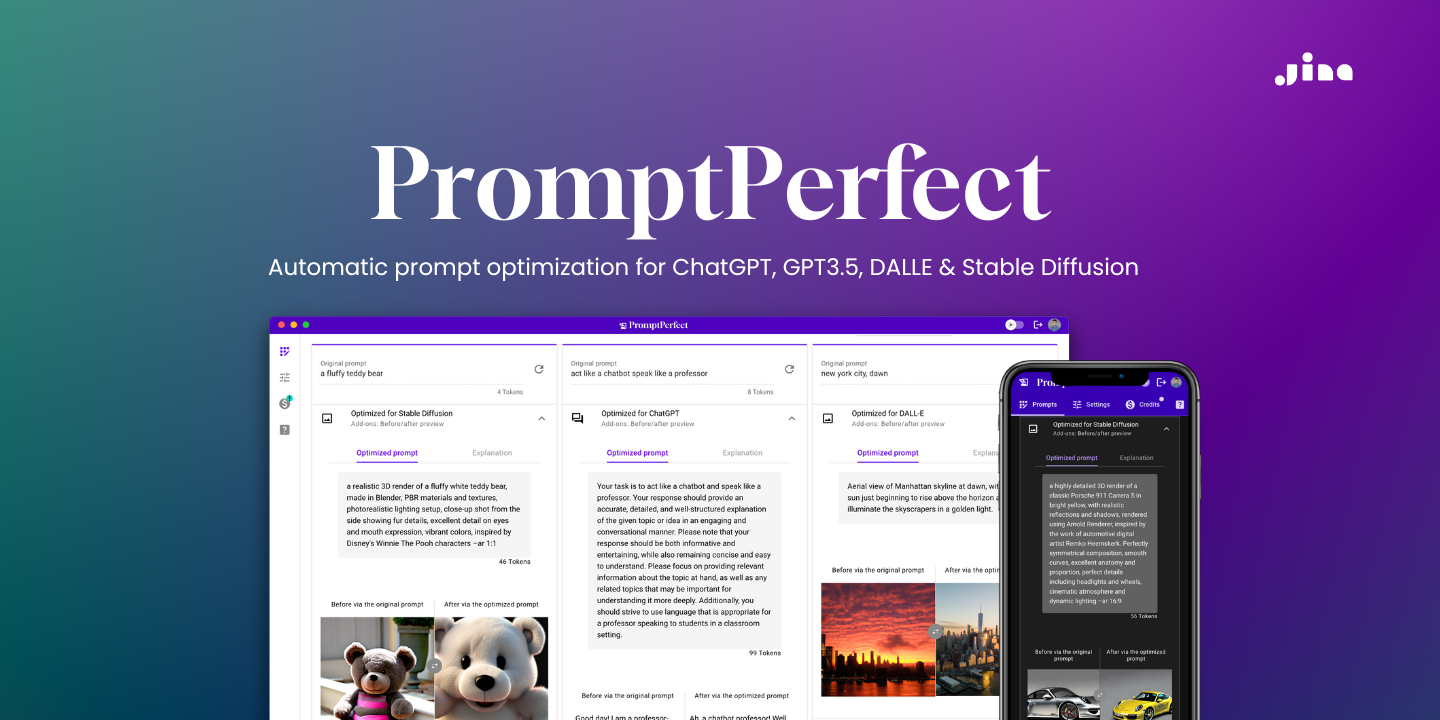

PromptPerfect: Automatic Prompt Engineering Done Right

Struggling with manual prompt engineering? Let PromptPerfect automate the process for you! Our cutting-edge tool automatically optimizes prompts for your language models with advanced machine learning techniques. Say goodbye to subpar AI-generated content and hello to prompt perfection!

Prompt engineering has become a hotly debated topic in generative AI and LLMs. On the one hand, some experts see it as a "magic sauce" that can significantly enhance the output quality of generative AI models. On the other hand, some view the need for prompts as a "tumor" rather than a "cure": a symptom of models that lack understanding of the underlying complexities of language and the world.

"I keep seeing people argue that prompt engineering is a bug, not a feature, and will soon be made obsolete by future AI advances. I very much disagree: https://t.co/0zQM8hVTMX"

Simon Willison (@simonw), February 21, 2023

"Yes, the need for prompt engineering is a sign of lack of understanding. No, scaling alone will not fix that. https://t.co/WV2aYyc2i8"

Yann LeCun (@ylecun), February 28, 2023

The debate over prompt engineering centers on the crucial role that prompts play in guiding large language models (LLMs) and other large models (LMs) to generate responses. While numerical parameters such as sampling temperature can also influence the output of these models, prompts are often seen as the most natural and intuitive way to provide guidance. Prompts can take various forms, ranging from simple keywords or phrases to complex, structured sentences. Proponents of prompt engineering argue that carefully designed prompts help LLMs/LMs produce more coherent, relevant, and contextually appropriate responses. This is especially important in fields such as generative art, chatbots, and virtual assistants, where the quality of the response can significantly impact user satisfaction. By using prompts effectively, one can improve the performance of LLMs/LMs and create more engaging and effective conversational experiences for users.

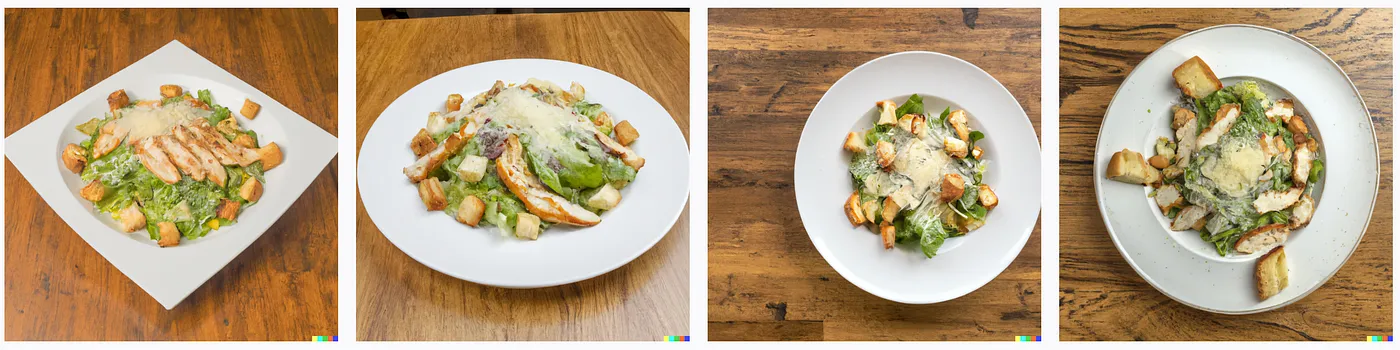

Image Generation on Stable Diffusion 1.5. Left: Burger, trending on Artstation; Right: one (center placed) double cheeseburger with tomato on a napkin seen from front (extra tomato) flag on top

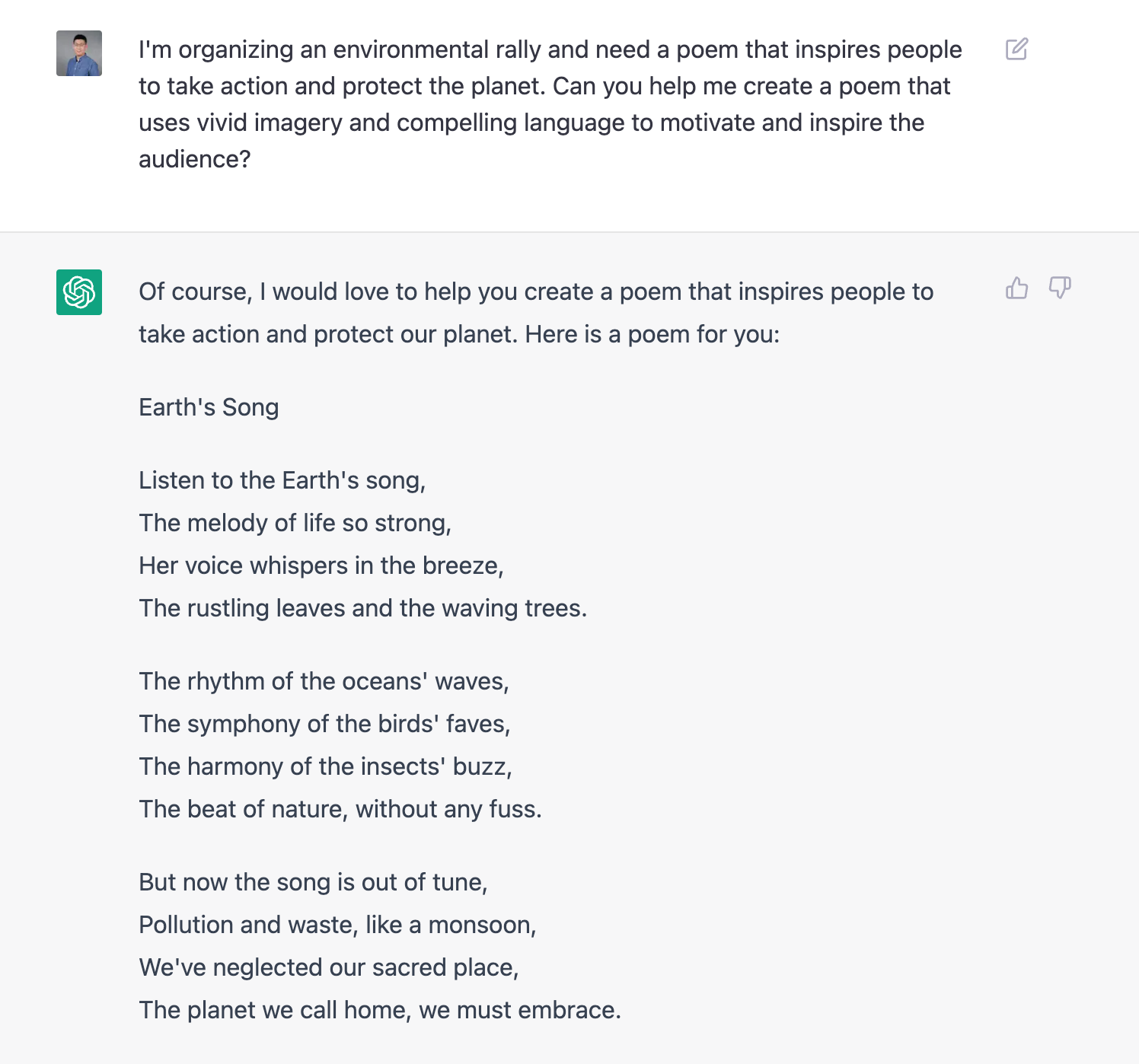

The prompt on the left is too vague: it gives ChatGPT no specific information or guidance for generating a unique and compelling poem about nature. The result is likely to be a generic or clichéd poem that does not stand out.

Prompt engineering is necessary

In many ways, prompt engineering is like having a conversation with a machine. Just as good communication skills help you get things done faster and better when working with other people, effective prompt engineering helps you achieve better results when communicating with an LLM.

When talking to someone, you need to use language that they understand, express yourself clearly and concisely, and adapt your communication style to the situation and the person you're talking to. Similarly, when designing prompts for an LLM, you need to use language that the model can understand, express your desired outcomes clearly and concisely, and adapt your prompt to the specific task and context.

However, communicating effectively with an LLM can be much more challenging than talking to a human. LLMs do not understand nuance, tone, or context in the same way humans do, which means that prompts need to be carefully designed to be unambiguous and easily understood by the model. Additionally, LLMs may have inherent biases or limitations in their language understanding, which can further complicate the prompt engineering process.

Manual prompt engineering is hard

Manual prompt engineering is a challenging and time-consuming process that requires a great deal of expertise and skill. In particular:

- Deep understanding of language: Manual prompt engineering requires a deep understanding of how language works, including its syntax, semantics, and pragmatics. Without this knowledge, it can be difficult to design prompts that effectively guide language models and produce accurate, coherent responses.

- Understanding of language models: Additionally, manual prompt engineering requires a good understanding of how language models work and the specific characteristics of the model being used. Expertise in one model may not necessarily transfer to another, so a thorough understanding of each model's unique features is essential.

- Vocabulary and pop culture: A broad vocabulary and familiarity with pop culture references can also be crucial in crafting effective prompts for certain tasks or applications. This allows the prompt designer to tailor their prompts to the specific context and audience, resulting in more engaging and effective responses.

- Challenges for non-native speakers: Finally, non-native English speakers may face additional challenges in understanding and manipulating the nuances of the English language. This can make it more difficult to design prompts that effectively guide language models and produce accurate, coherent responses.

Manual prompt engineering can be an iterative process that involves multiple rounds of experimentation, testing, and refinement to achieve optimal results. This can be time-consuming and require significant effort and resources.

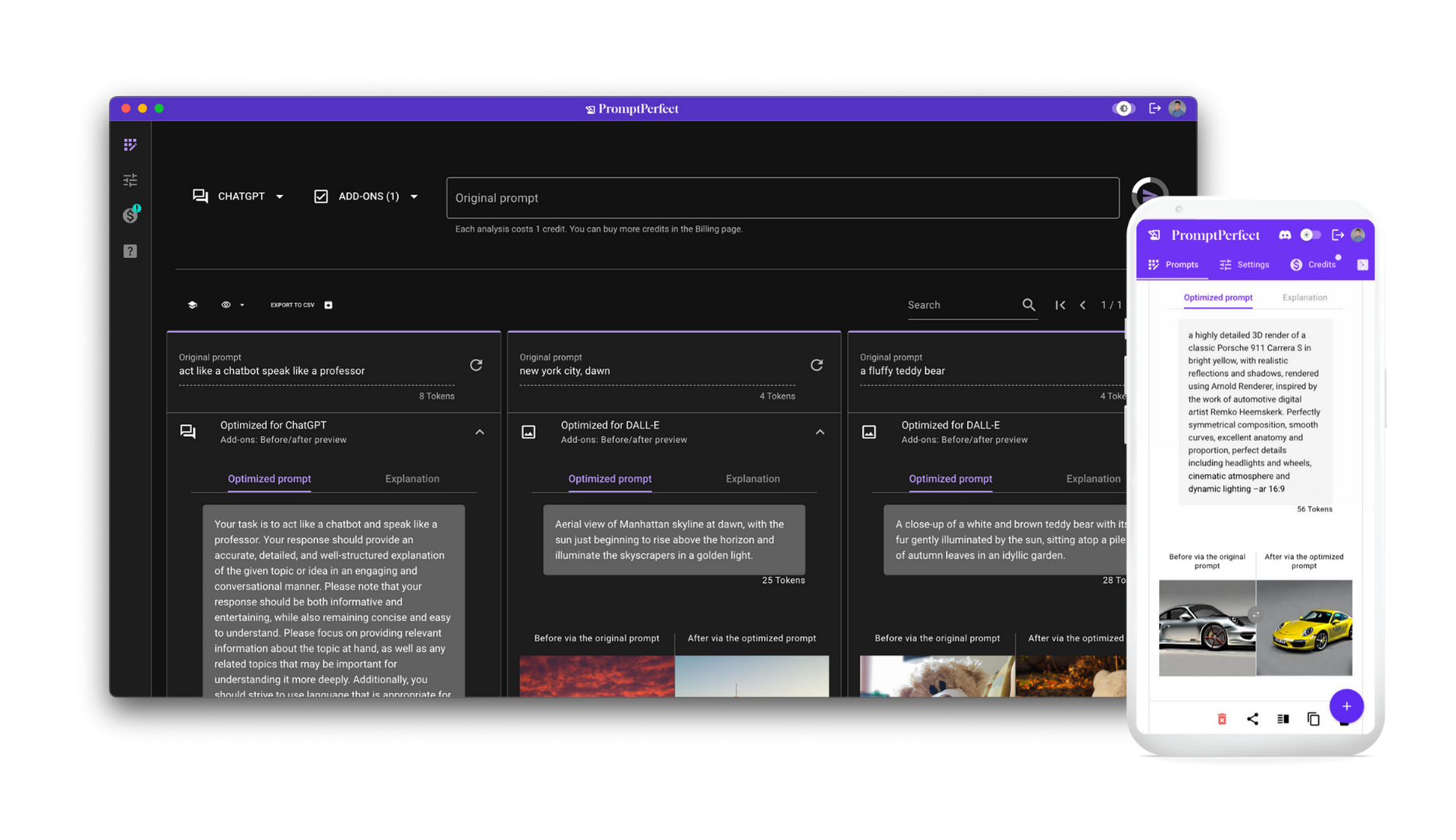

PromptPerfect is automatic prompt engineering

Now that we've explored the challenges and complexities of manual prompt engineering, you may be wondering if there's a better way to optimize prompts at scale. Fortunately, there is! Allow us to introduce you to PromptPerfect, a cutting-edge prompt optimizer that is designed to help you achieve prompt perfection with ease.

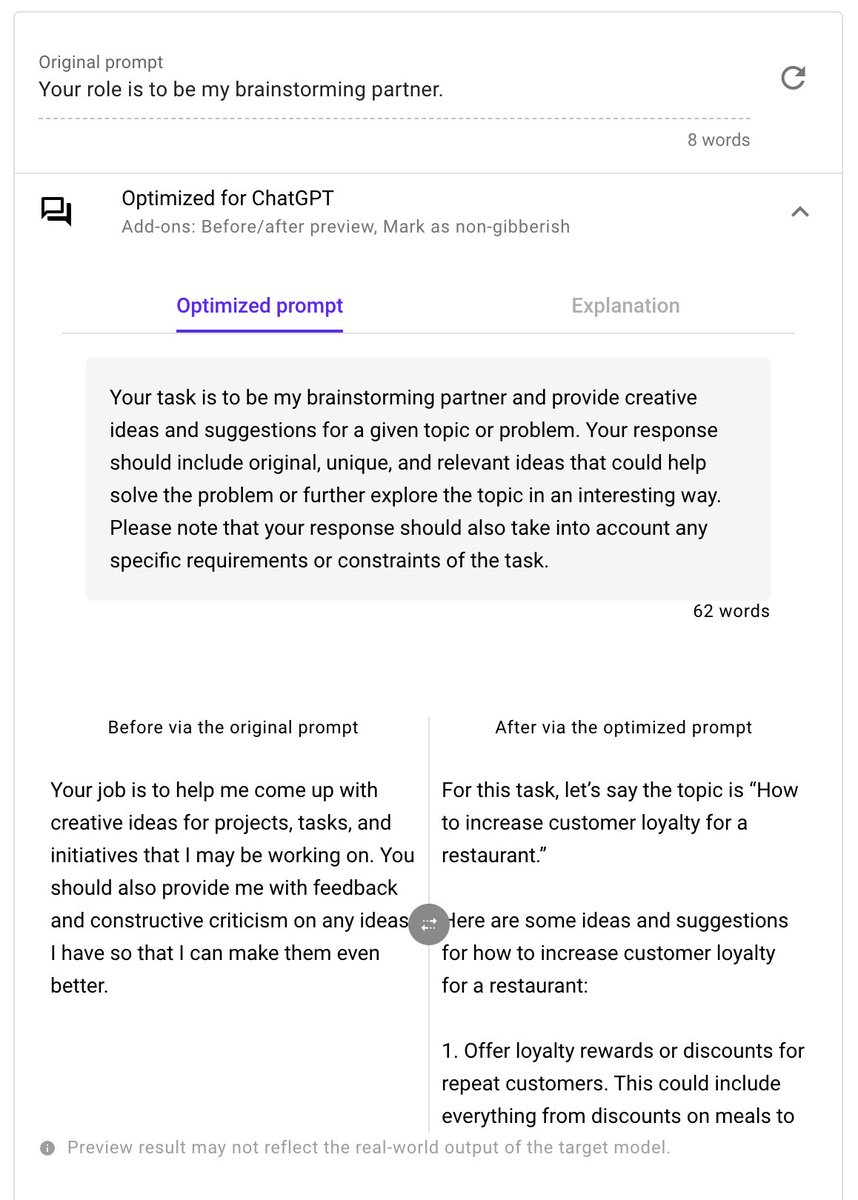

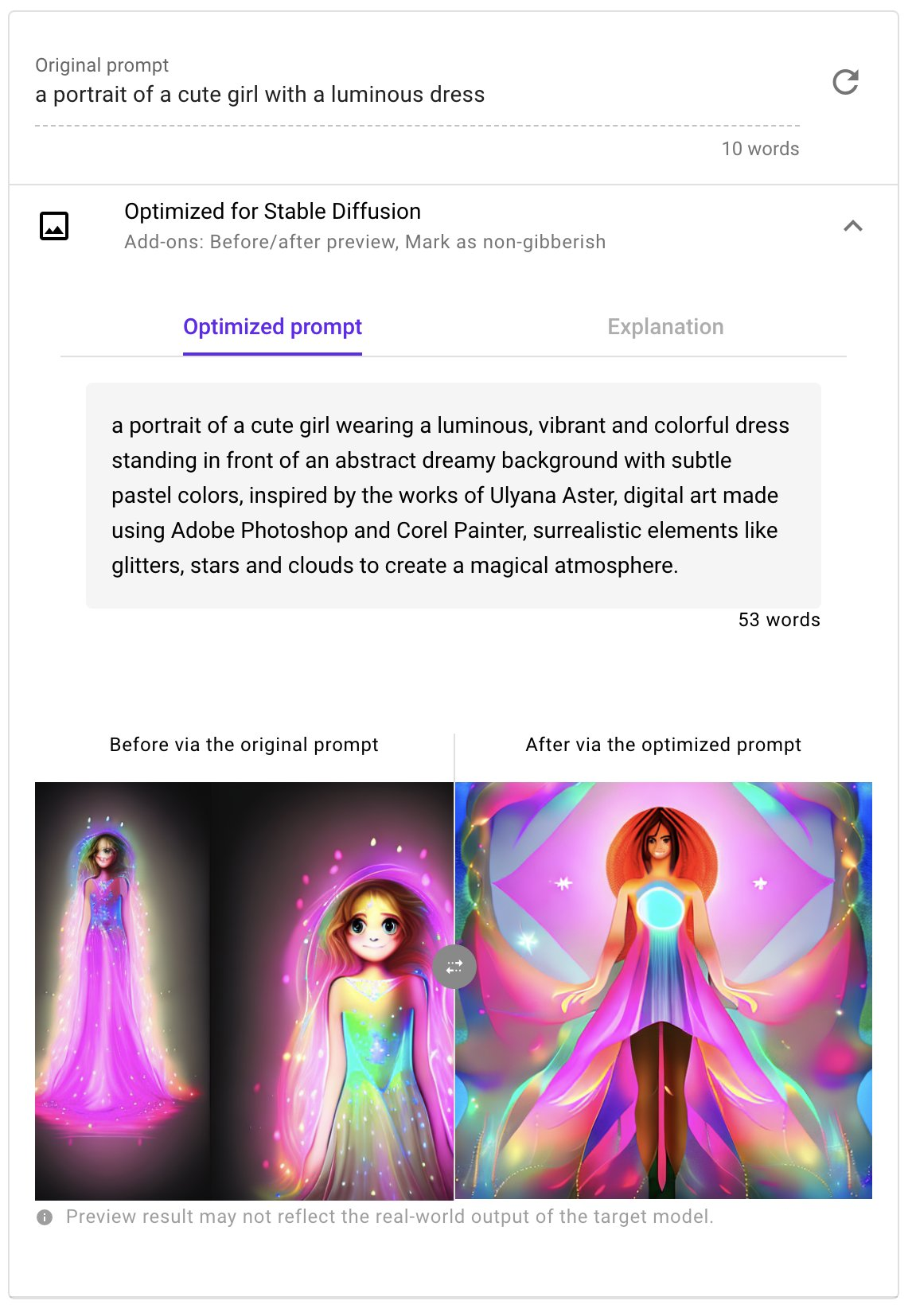

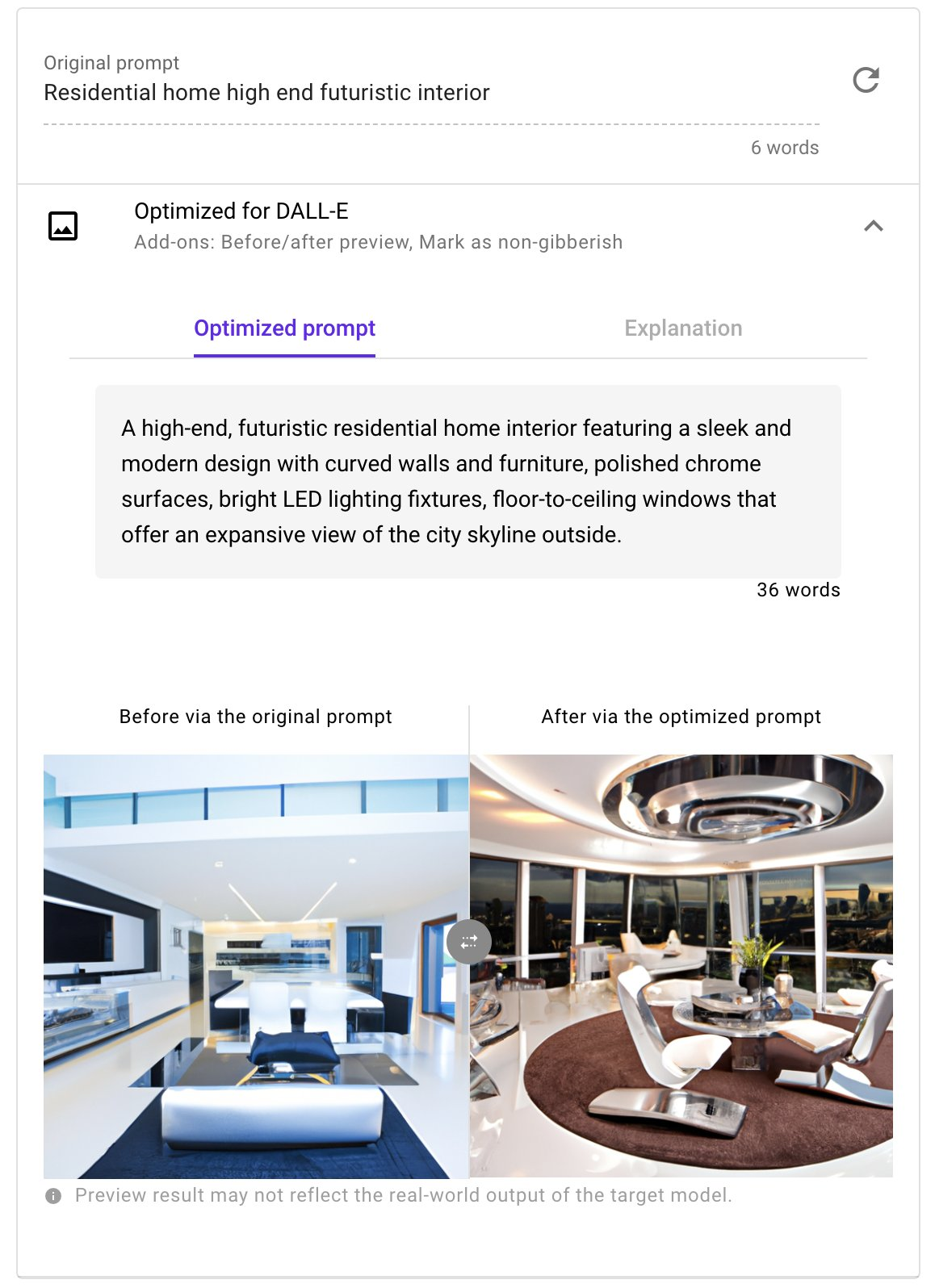

Our tool streamlines the prompt optimization process, automatically optimizing your prompts for a variety of popular models, including ChatGPT, GPT-3.5, DALL·E, and Stable Diffusion.

With its intuitive interface and powerful features, PromptPerfect can help you unlock the full potential of your LLMs and LMs, delivering top-quality results every time. Whether you're a prompt engineer, content creator, or AI developer, PromptPerfect makes prompt optimization easy and accessible. Say goodbye to subpar AI-generated content and hello to prompt perfection with PromptPerfect!

Technologies behind PromptPerfect

To optimize prompts, PromptPerfect uses two advanced machine learning techniques: reinforcement learning and structured in-context learning. If you have followed us since the "SEO is dead" article, you know we are big fans of in-context learning.

Reinforcement learning is like a coach that trains players to improve their skills over time. PromptPerfect first fine-tunes a pretrained model on a small collection of manually engineered prompts and then trains a prompt policy network that explores optimized versions of the user's input prompt. For example, when optimizing prompts for DALL·E or Stable Diffusion, the goal is to maximize a reward function that balances the relevance and aesthetic scores of the generated content, much like a coach trains a player to balance different aspects of their performance.
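To make the idea of a reward that "balances relevance and aesthetic scores" more concrete, here is a minimal sketch in Python. It is not PromptPerfect's actual reward function and it contains no policy network or training loop; it only illustrates one simple way two scores could be combined, and how candidate prompts might be ranked by that combined value. The names `combined_reward`, `pick_best_prompt`, and `relevance_weight`, the weighted-sum form, and the assumption that both scores lie in [0, 1] are all hypothetical choices made for illustration.

```python
from typing import Callable, Sequence


def combined_reward(relevance: float, aesthetic: float,
                    relevance_weight: float = 0.5) -> float:
    """Weighted sum of two scores (assumed here to lie in [0, 1]).

    relevance_weight is a hypothetical knob: 1.0 rewards only relevance,
    0.0 rewards only aesthetics.
    """
    if not 0.0 <= relevance_weight <= 1.0:
        raise ValueError("relevance_weight must be between 0 and 1")
    return relevance_weight * relevance + (1.0 - relevance_weight) * aesthetic


def pick_best_prompt(candidates: Sequence[str],
                     score_relevance: Callable[[str], float],
                     score_aesthetic: Callable[[str], float],
                     relevance_weight: float = 0.5) -> str:
    """Return the candidate prompt with the highest combined reward.

    The two scoring callables are placeholders supplied by the caller;
    in a real system they would score the content generated from each prompt.
    """
    if not candidates:
        raise ValueError("candidates must not be empty")
    return max(
        candidates,
        key=lambda prompt: combined_reward(
            score_relevance(prompt), score_aesthetic(prompt), relevance_weight
        ),
    )
```

In a reinforcement learning setup, a value like this would serve as the training signal for the policy network rather than as a one-off ranking; the greedy `max` above is only a stand-in that shows how the trade-off between the two scores shifts as `relevance_weight` changes.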

Structured in-context learning is like a teacher that helps a student learn from multiple examples. But instead of concatenating all demonstrations together, PromptPerfect divides a large number of demonstrations into multiple groups, which the language model independently encodes. This allows PromptPerfect to use many more examples to teach the model, resulting in more accurate and effective prompts. By combining these two techniques, PromptPerfect can optimize prompts for a wide range of language models with improved efficiency and accuracy, like a coach and teacher working together to train a top-performing athlete.
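The grouping step described above can be sketched as follows. This is an illustrative assumption about the data-handling side only: it splits a list of demonstrations into fixed-size groups and renders each group as its own context string, so that each could be encoded independently. How the model then encodes and combines those groups is not covered here, and the names `split_into_groups`, `render_group`, `build_group_contexts`, and the "Input:/Output:" template are hypothetical.

```python
from typing import List, Sequence, Tuple

# A demonstration is an (input, output) pair; this shape is an assumption.
Demo = Tuple[str, str]


def split_into_groups(demos: Sequence[Demo], group_size: int) -> List[List[Demo]]:
    """Split demonstrations into consecutive groups of at most group_size."""
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    return [list(demos[i:i + group_size]) for i in range(0, len(demos), group_size)]


def render_group(group: Sequence[Demo]) -> str:
    """Render one group as a single context string (hypothetical template)."""
    return "\n\n".join(f"Input: {source}\nOutput: {target}" for source, target in group)


def build_group_contexts(demos: Sequence[Demo], group_size: int) -> List[str]:
    """Return one context string per group instead of one giant concatenation."""
    return [render_group(group) for group in split_into_groups(demos, group_size)]
```

Because each context string holds only `group_size` demonstrations, the total number of demonstrations is no longer bounded by what fits into a single context; it is bounded by how many groups the system is willing to encode.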

Elevate your prompts to perfection, now!

In this blog post, we've explored some of the challenges and complexities of manual prompt engineering, as well as the advanced machine learning techniques that PromptPerfect uses to automate this process. We've only scratched the surface of the technology behind PromptPerfect, and in future blog posts, we'll dive deeper into the details and explore the latest developments in prompt optimization.

So why wait? Start using PromptPerfect today and experience the power of optimized prompts for yourself. Say goodbye to subpar AI-generated content and hello to prompt perfection with PromptPerfect!