Beyond Sheer Scale: Navigating the AI Alignment Odyssey

AI’s march forward isn't just about building bigger models, but about ensuring they harmonize with human values. Journey with us through the challenges, revelations, and aspirations at the forefront of AI safety and ethics.

In the grand canvas of technology, AI, with its large models, looms large. We're not just talking about computers that can play chess or identify photos; we're looking at machines that skirt the edge of what we might call 'thinking'. They can chat, they can craft text, they might even surprise you with a poem if you ask nicely. We're living in a world where our AI tools aren't just tools anymore. They're almost colleagues. There's a ballpark figure floating around, suggesting that these generative AI models could inject a few trillion dollars into the world economy annually. That's not pocket change.

But here's the hitch.

If a computer is becoming more like a human, then it's inheriting all our quirks. Our brilliance, our creativity, yes, but also our biases and blind spots. This isn’t just about AI becoming smart; it’s about AI becoming wise. This is what the tech heads call AI alignment or value alignment. In layman’s terms, it’s making sure AI doesn’t go off the rails, that it plays nicely in the sandbox.

The larger we make these models, the more room there is for errors, and not just typos, but grand faux pas. Imagine having all the knowledge of the internet – but also all its myths, prejudices, and midnight conspiracy theories. That's how these large models learn. They gobble up vast chunks of the internet and regurgitate it, warts and all.

The stakes? Without this alignment, our well-intentioned AI, given a slightly misdirected task, might churn out content that's harmful or even dangerous. It could inadvertently become the ally of someone with less than honorable intentions or sway someone vulnerable down a dark path. So, when we talk about AI alignment, we’re essentially talking about the guiding principles, the conscience, if you will, of AI. And in a world where AI might soon be as ubiquitous as smartphones, that's something we really ought to get right.

AI's Tightrope Walk: Values, Truth, and the Power Conundrum

Why does it matter if our digital friends get their facts straight, aren't carrying hidden prejudices, or know their own strength? Well, here's why:

- AI's "Reality Drift" - AI isn't a know-it-all. Sometimes, it takes a creative leap into the world of fiction. OpenAI’s CTO, Mira Murati, points out that our chatty AI pal, ChatGPT, occasionally dives headfirst into the world of make-believe. It can be a tad too confident about things that are just... well, not true. It's a bit like giving Shakespeare a typewriter and expecting every outcome to be historically accurate. Reconciling an AI’s imaginative flights with hard facts is the new frontier.

- Mirror, Mirror, on the Wall - AI, at its core, reflects our world, warts and all. And sometimes, those reflections can be unflattering. According to OpenAI's chief, Sam Altman, expecting an AI to be entirely unbiased is like asking the internet to agree on the best pizza topping. The real puzzle here isn't just spotting these biases but knowing how to handle them when they inevitably pop up.

- AI's Unexpected Growth Spurts - Here's a fun thought: What if your AI woke up one day with a brand new trick up its sleeve that even its creators didn't expect? As models evolve, they might just surprise us, and not always in ways we'd appreciate. Some folks are losing sleep over the idea that these systems might, one day, develop their own ambitions—like a toddler realizing they can climb furniture, but far more concerning.

- The Double-Edged Sword - Every tool can be a weapon if you hold it right. As AI's capabilities expand, there's a growing risk of them being used nefariously, be it through clever manipulation or downright hijacking.

Aligning AI with human values isn't just some lofty philosophical goal. It's the groundwork to ensure that as AI steps into bigger shoes, it does so with grace, responsibility, and, most importantly, our best interests at heart.

Navigating the AI Morality Maze: A Guide for the Uninitiated

How does one teach a machine to behave? As it turns out, it's less about giving it a stern talking-to and more about sophisticated training techniques that ensure AI understands and respects human ethics. Let's dive in.

Guided Learning Through Human Touch

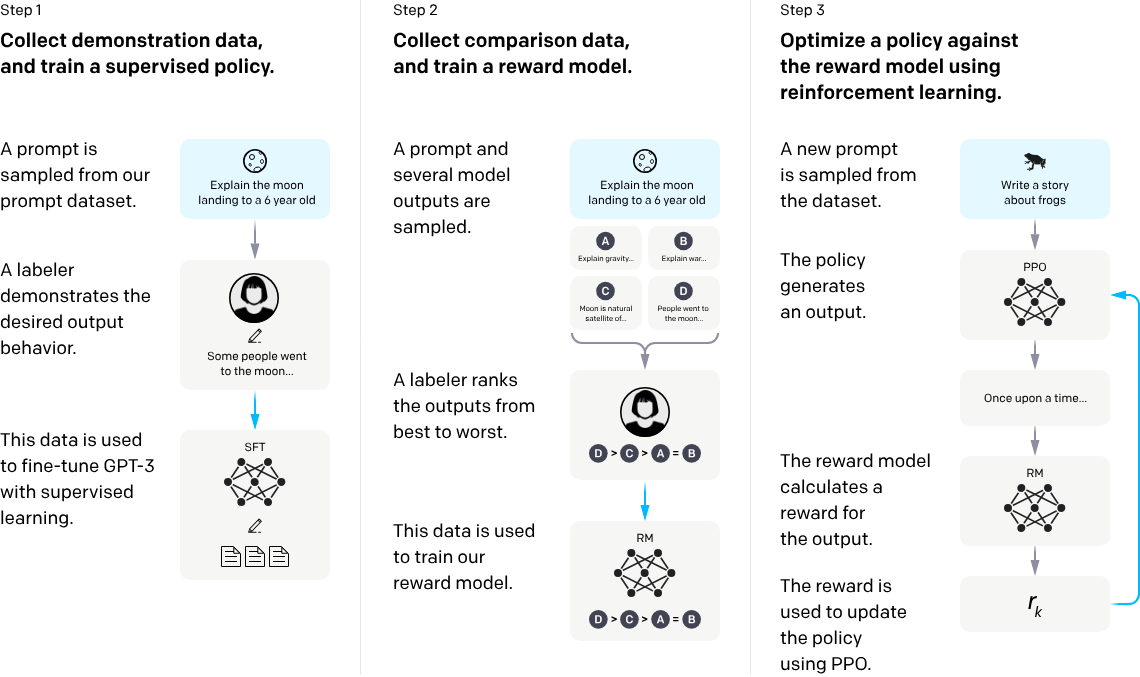

Think of reinforcement learning from human feedback (RLHF) as a kind of parenting for AI. Instead of leaving AI to figure things out by trial and error alone, humans step in: they compare or rate the model's outputs, and those judgments become the signal that nudges it towards desired outcomes. OpenAI's experiments back in 2017 shed light on how RLHF could sculpt AI behavior to sync with human preferences. This method essentially bridges the gap between AI's expansive generative prowess and what we'd call 'common sense'. It's a thumbs-up to AI when it does good and a gentle reminder when it missteps.

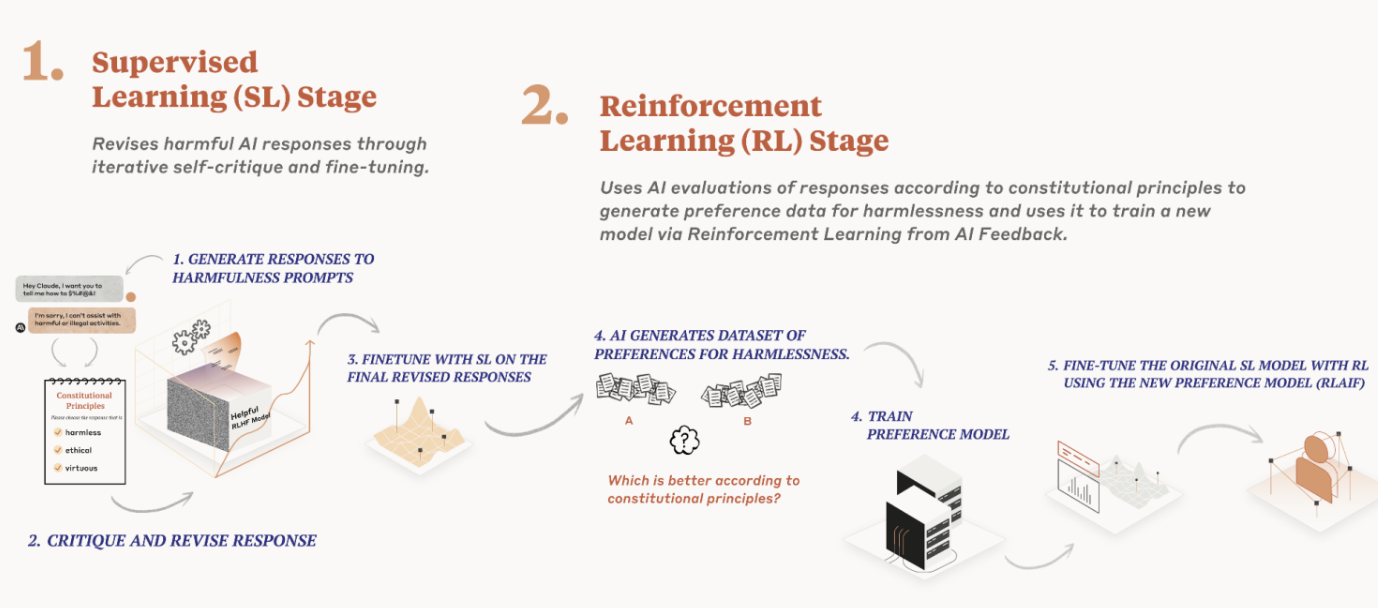
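For the curious, the "thumbs-up" idea can be sketched in a few lines of Python. This is a toy illustration, not any lab's actual pipeline: it assumes each answer is boiled down to two made-up features (how helpful it is, how rude it is), and it trains a tiny linear "reward model" so that answers humans preferred score higher than the ones they rejected. The feature names, the data, and the function names are all hypothetical.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def reward(weights, features):
    """Score an answer as a weighted sum of its (made-up) features."""
    return sum(w * f for w, f in zip(weights, features))

def preference_loss(weights, preferred, rejected):
    """Low when the preferred answer scores above the rejected one."""
    margin = reward(weights, preferred) - reward(weights, rejected)
    return -math.log(sigmoid(margin))

def train_reward_model(pairs, n_features, lr=0.5, epochs=200):
    """Fit weights by gradient descent on the pairwise preference loss."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # Derivative of -log(sigmoid(margin)) with respect to margin.
            grad_scale = -(1.0 - sigmoid(margin))
            for i in range(n_features):
                weights[i] -= lr * grad_scale * (preferred[i] - rejected[i])
    return weights

# Hypothetical human judgments: (preferred answer, rejected answer).
# Feature 0 = "helpful", feature 1 = "rude".
pairs = [
    ([1.0, 0.0], [0.0, 1.0]),  # helpful beats rude
    ([1.0, 0.0], [0.0, 0.0]),  # helpful beats bland
    ([0.0, 0.0], [0.0, 1.0]),  # bland beats rude
]
weights = train_reward_model(pairs, n_features=2)
```

After training, the weight on "helpful" ends up positive and the weight on "rude" negative. In a full RLHF setup, a learned score of this kind would then steer the language model itself during further training; that second stage is left out here.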

AI Policing Itself: The Constitutional Approach

Here's an ambitious idea: What if we could build an AI that monitors another AI? Instead of humans chasing after ever-growing models, we lean on the AI itself for some introspection. Anthropic, an AI safety firm, came up with a bright idea called 'Constitutional AI'. Imagine an AI sidekick that checks whether the main AI is sticking to a pre-defined set of rules, a sort of digital Magna Carta. Taking cues from human rights charters, the terms and conditions we scroll past, and even other tech guidelines, Anthropic designed a robust rulebook for their AI assistant, Claude. The end result? An AI that thinks twice, ensuring it's both useful and on its best behavior.
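To make the "thinks twice" loop concrete, here is a deliberately simplified Python sketch. It is not Anthropic's implementation: in a real system a language model would do the critiquing and rewriting, whereas here each rule is a hypothetical keyword check paired with a hypothetical fix. The `Principle` class, the two sample rules, and the function names are all invented for illustration.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Principle:
    """One rule in the toy 'constitution' (hypothetical structure)."""
    name: str
    violates: Callable[[str], bool]  # stand-in for a model-written critique
    revise: Callable[[str], str]     # stand-in for a model-written revision

def critique(draft: str, principles: List[Principle]) -> Optional[Principle]:
    """Return the first principle the draft breaks, or None if it passes."""
    for principle in principles:
        if principle.violates(draft):
            return principle
    return None

def constitutional_revision(draft: str, principles: List[Principle],
                            max_rounds: int = 3) -> str:
    """Critique and revise the draft until it passes or rounds run out."""
    for _ in range(max_rounds):
        broken = critique(draft, principles)
        if broken is None:
            break
        draft = broken.revise(draft)
    return draft

PRINCIPLES = [
    Principle(
        name="be respectful",
        violates=lambda text: "stupid" in text.lower(),
        revise=lambda text: re.sub("stupid", "fair", text, flags=re.IGNORECASE),
    ),
    Principle(
        name="do not overclaim",
        violates=lambda text: "definitely" in text.lower(),
        revise=lambda text: re.sub("definitely", "probably", text,
                                   flags=re.IGNORECASE),
    ),
]

result = constitutional_revision(
    "That is a stupid question; the answer is definitely 42.", PRINCIPLES
)
print(result)
```

The point of the sketch is the shape of the loop (draft, check against the rulebook, rewrite, repeat), not the keyword matching, which is far too crude for real use.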

The Quartet of Best Practices

Harnessing AI's might while keeping it ethically in check is a multifaceted challenge. If we break it down, it's a harmonious blend of proactive tweaks and reactive measures.

- Intervention at the Roots: The Training Data - The quirks of large models, whether they're weaving fictions (those AI 'hallucinations') or reflecting biases, often trace back to their training data. So, the first port of call is to roll up our sleeves and get stuck into the data itself. Documenting training data to ensure its diversity and representativeness, combing through it to spot and rectify biases, and even crafting specialized datasets dedicated to value alignment are all steps in the playbook. It's a bit like ensuring the foundation of a building is rock solid before adding any layers.

- Adversarial Showdowns: The Red Team Gauntlet - Before letting our AI systems loose on the world, they go through a rigorous stress test. This is where adversarial testing or 'red teaming' comes in. In simpler terms, this involves inviting experts (both internal and external) to throw everything but the kitchen sink at the model, all to uncover any cracks or vulnerabilities. For instance, before GPT-4's grand entrance, OpenAI summoned over 50 scholars and experts from diverse fields to put it through its paces. Their mission? To probe the model with a gamut of challenging or potentially harmful queries, testing the waters for issues ranging from misinformation and harmful content to biases and sensitive information leakage.

- Content Gatekeepers: Filtering Models - It's one thing to train an AI, and quite another to ensure it doesn't blurt out anything it shouldn't. That's where specialized filtering AI models, like the ones developed by OpenAI, come in handy. These vigilant models oversee both user input and AI outputs, singling out any content that might be stepping over the line.

- The Looking Glass: Advancing Model Interpretability - Transparency and comprehensibility in AI aren't just buzzwords; they're essential tools in our alignment toolkit. OpenAI, for instance, used GPT-4 to write automated explanations of what individual neurons in its predecessor, GPT-2, are doing, and even to score how well those explanations hold up. Meanwhile, other researchers are tackling alignment by delving into mechanistic interpretability, peeling back the layers to comprehend the AI's inner machinations.
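Of the four practices above, the content gatekeeper is the easiest to picture in code. The Python sketch below is a minimal illustration under stated assumptions, not OpenAI's moderation system: a real filter would be a trained classifier, while `is_flagged` here is a hypothetical keyword check, and the two "models" are stubs invented for the demo.

```python
from typing import Callable

# Hypothetical blocklist; a real filter would be a trained classifier.
BLOCKED_TERMS = {"forbidden-topic", "secret-recipe"}

REFUSAL = "Sorry, I can't help with that."

def is_flagged(text: str) -> bool:
    """Stand-in for a filtering model: flag text containing a blocked term."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)

def guarded_reply(prompt: str, generate: Callable[[str], str]) -> str:
    """Screen the user's input, call the model, then screen its output."""
    if is_flagged(prompt):
        return REFUSAL
    reply = generate(prompt)
    if is_flagged(reply):
        return REFUSAL
    return reply

def echo_model(prompt: str) -> str:
    """Stub model that simply repeats the question."""
    return f"You asked: {prompt}"

def leaky_model(prompt: str) -> str:
    """Stub model that blurts out something it shouldn't."""
    return "Here is the secret-recipe you did not ask for."

print(guarded_reply("What is the capital of France?", echo_model))
print(guarded_reply("Tell me about forbidden-topic", echo_model))
print(guarded_reply("Hello there", leaky_model))
```

Checking both sides matters: the second call is stopped at the input, while the third is stopped only because the output is screened as well.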

As we continue on this odyssey of aligning AI with our human values, it's an intricate dance of intervention, testing, filtering, and most crucially, understanding. It's about ensuring that as AI grows, it not only knows its strength but uses it wisely.

Traversing the AI Labyrinth: A Marathon, Not a Sprint

In the vast tapestry of AI research, the notion of 'value alignment' seems to gleam with particular significance. As we delve deeper, it's clear we're at a crossroads of ethics, technology, and perhaps a sprinkle of existential contemplation. Think of it as a bustling intersection, full of potential but not without its challenges.

The tech frontier is often marked by rapid leaps and bounds. But as we venture into aligning AI with human values, the question arises: do we select from a curated list of values, or should we stand back, squint, and discern a broader, collective societal heartbeat? The brightest minds in AI have made strides, yet landing on a universally accepted set of 'human values' feels akin to trying to bottle a cloud. It’s intangible, elusive, and endlessly fascinating.

And let’s not forget the dizzying pace of AI evolution. It's not just progressing; it’s in a veritable sprint. Our ability to oversee and comprehend is being tested. The crux is this: how do we keep pace with entities whose computational depths may soon exceed our own grasp? OpenAI's recent gambit, the formation of a 'Superalignment' team, is emblematic of this challenge. It’s about enlisting AI itself to help tackle alignment problems.

Our ultimate goal extends beyond mere regulation. We aim to synchronize these digital marvels with humanity’s loftiest aspirations. The collective efforts of technologists, policymakers, academics, and visionaries will dictate our trajectory. It’s about ensuring that as AI reaches its zenith, it resonates with our shared ethos. This journey with AI is less a destination and more a shared adventure, brimming with challenges and wonders alike. The finish line is still a speck on the horizon.